The Node.js security releases—initially queued for mid‑December—were rescheduled to January 7, 2026. That buys time, but it doesn’t reduce risk. If Node is your runtime, use the next couple of weeks to harden pipelines, rehearse upgrades, and pre‑stage artifacts so that shipping on Jan 7 is a calm push, not a fire drill. In this guide I’ll lay out a practical plan to navigate the Node.js security releases without breaking prod, and I’ll map the runtime patches to the React/Next.js security work many teams just finished.

What exactly changed—and why the delay matters

Maintainers signaled multiple high‑severity issues affecting the 25.x, 24.x, 22.x, and 20.x lines, alongside medium and low items. The release moved to Jan 7 to give contributors enough space to finish a hard patch and to avoid holiday disruption. For most companies, that timing intersects with code freezes, thin on‑call rotations, and peak traffic. Translation: the risk profile shifts from “rush now” to “prepare deliberately, then ship quickly.”

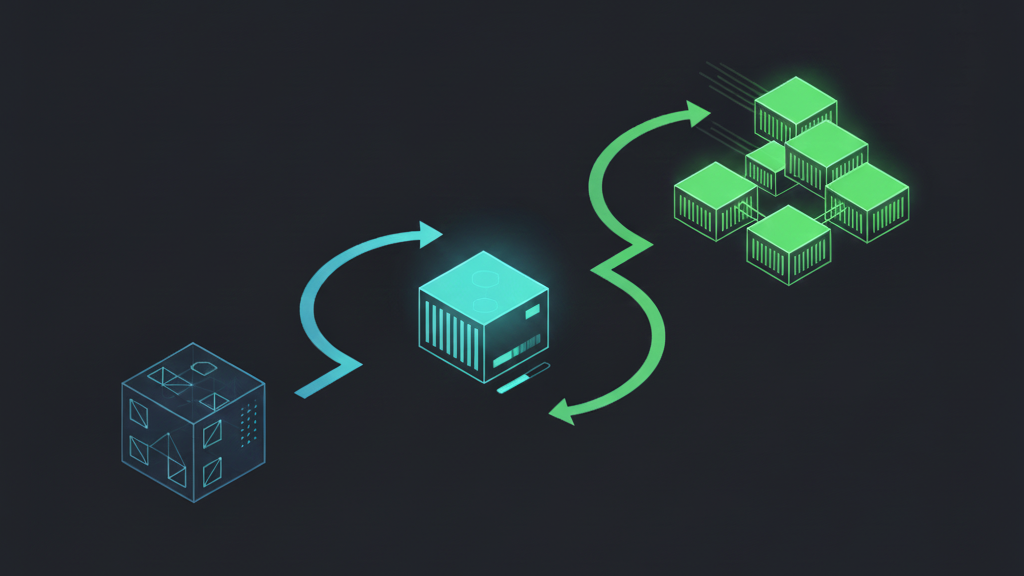

Here’s the thing: runtime upgrades ripple. You’ll touch Docker base images, native addons, CI caches, OpenSSL behavior, health checks, and sometimes glibc quirks. Waiting until release week to think about this is how incidents start.

A holiday‑safe patch runbook for Jan 7

Use this step‑by‑step flow. It’s tuned for teams running Node across APIs, background jobs, and edge functions.

1) Inventory and risk bucket (today)

Build a runtime bill of materials. For each service: Node major/minor, OS base, libc, OpenSSL, native modules, package manager (npm/pnpm/yarn) version, and whether the service handles untrusted input. Mark internet‑facing and high‑privilege services as Tier A. Everything else falls into Tier B or C. The security releases hit all supported lines, so your risk is more about exposure and blast radius than version bragging rights.
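If you go the script route, one row of that inventory can be collected from inside each service's container, on the image it actually ships. The TypeScript below is a minimal sketch, not a complete inventory: collectBom, the field names, and the simplified Tier A rule are our own assumptions. It reads glibc from the diagnostic report header (the field is absent on musl/Alpine), takes the package manager from npm_config_user_agent (set only when the process is launched through an npm, pnpm, or yarn script), and leaves native modules and exposure details to your other sources.

```typescript
// Sketch of one runtime bill-of-materials (RBOM) row per service.
// collectBom, the field names, and the tier rule are illustrative assumptions.
interface RuntimeBom {
  service: string;
  node: string;
  onPatchedLine: boolean;
  openssl: string;
  glibc: string | undefined; // undefined on musl (Alpine) and non-Linux hosts
  platform: string;
  arch: string;
  packageManager: string | undefined; // e.g. "npm/10.x.y" when started via a package script
  tier: "A" | "B/C";
}

// Release lines the maintainers said would receive the January patches.
const PATCHED_LINES = new Set([20, 22, 24, 25]);

function isPatchedLine(nodeVersion: string): boolean {
  const major = Number(nodeVersion.replace(/^v/, "").split(".")[0]);
  return PATCHED_LINES.has(major);
}

function collectBom(service: string, internetFacing: boolean, highPrivilege: boolean): RuntimeBom {
  // The diagnostic report header exposes glibcVersionRuntime on glibc-based Linux builds.
  const report = process.report.getReport() as { header?: { glibcVersionRuntime?: string } };
  // npm, pnpm and yarn set this variable for the processes they launch.
  const userAgent = process.env.npm_config_user_agent;
  return {
    service,
    node: process.versions.node,
    onPatchedLine: isPatchedLine(process.versions.node),
    openssl: process.versions.openssl,
    glibc: report.header?.glibcVersionRuntime,
    platform: process.platform,
    arch: process.arch,
    packageManager: userAgent?.split(" ")[0],
    tier: internetFacing || highPrivilege ? "A" : "B/C",
  };
}

// Hypothetical service name; emit one JSON line per service and collect them centrally.
console.log(JSON.stringify(collectBom("checkout-api", true, false)));
```

Emitting JSON lines keeps the output easy to merge into a spreadsheet or a small database, one row per service.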

2) Freeze drift, pin images (today)

Lock Docker bases by digest, not tag. If you’re using official Node images, resolve the current digest and bake it into your Dockerfiles. In CI, cache npm/pnpm aggressively but capture the lockfile hash to prevent partial upgrades. The goal is predictable rebuilds now and a clean diff when you move to patched Node builds in January.
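Capturing the lockfile hash can be a few lines in CI. This sketch assumes a single-package repo that commits exactly one of the three common lockfiles at its root; it hashes whichever it finds with SHA-256 and throws if the result differs from a snapshot you stored earlier. The helper names are hypothetical.

```typescript
import { createHash } from "node:crypto";
import { existsSync, readFileSync } from "node:fs";
import { join } from "node:path";

// Lockfiles checked in order; the repo is assumed to commit exactly one of them.
const LOCKFILES = ["package-lock.json", "pnpm-lock.yaml", "yarn.lock"];

function sha256Hex(data: string | Buffer): string {
  return createHash("sha256").update(data).digest("hex");
}

function findLockfile(dir: string): string | undefined {
  return LOCKFILES.map((name) => join(dir, name)).find((path) => existsSync(path));
}

// Returns the current hash; throws if it differs from the snapshot you pinned earlier.
function checkLockfile(dir: string, expectedSha256?: string): string {
  const path = findLockfile(dir);
  if (!path) throw new Error(`no lockfile found in ${dir}`);
  const actual = sha256Hex(readFileSync(path));
  if (expectedSha256 !== undefined && actual !== expectedSha256) {
    throw new Error(`${path} drifted: expected ${expectedSha256}, got ${actual}`);
  }
  return actual;
}
```

In a CI step you might call checkLockfile(process.cwd(), process.env.LOCKFILE_SHA256), where LOCKFILE_SHA256 is a hypothetical variable holding today's snapshot, and let the thrown error fail the job.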

3) Native module rehearsal (next 48 hours)

Identify native dependencies (bcrypt, sharp, canvas, node‑sass, grpc, etc.). Recompile them on a staging host that matches your production OS and CPU family. If you run Alpine with musl, do it there; if you run Ubuntu Jammy with glibc, do it there. Record which packages failed and the exact error messages. Create a one‑page “known issues” doc for release day.
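To build that list from the code rather than from memory, a rough scan of node_modules helps. This sketch treats a package as native if it contains a binding.gyp or a compiled .node file (our heuristic, not an npm rule), walks only top-level and scoped packages, and is meant to run on the same staging host you rebuild on.

```typescript
import { existsSync, readdirSync, statSync } from "node:fs";
import { join } from "node:path";

function isDir(path: string): boolean {
  try {
    return statSync(path).isDirectory(); // follows symlinks, which pnpm uses heavily
  } catch {
    return false;
  }
}

// Looks for a compiled addon (*.node) up to `depth` directories deep.
function hasNodeBinary(dir: string, depth: number): boolean {
  if (depth < 0 || !isDir(dir)) return false;
  for (const entry of readdirSync(dir, { withFileTypes: true })) {
    if (entry.isFile() && entry.name.endsWith(".node")) return true;
    if (entry.isDirectory() && entry.name !== "node_modules" && hasNodeBinary(join(dir, entry.name), depth - 1)) {
      return true;
    }
  }
  return false;
}

// Heuristic: a binding.gyp means node-gyp builds it; a .node file means a compiled addon ships with it.
function isNativePackage(pkgDir: string): boolean {
  return existsSync(join(pkgDir, "binding.gyp")) || hasNodeBinary(pkgDir, 4);
}

// Top-level packages only (including @scope/name); pnpm's .pnpm store is not walked.
function listPackages(nodeModules: string): string[] {
  if (!isDir(nodeModules)) return [];
  const names: string[] = [];
  for (const entry of readdirSync(nodeModules)) {
    const full = join(nodeModules, entry);
    if (entry.startsWith(".") || !isDir(full)) continue;
    if (entry.startsWith("@")) {
      for (const scoped of readdirSync(full)) names.push(`${entry}/${scoped}`);
    } else {
      names.push(entry);
    }
  }
  return names;
}

function findNativeModules(projectDir: string): string[] {
  const nodeModules = join(projectDir, "node_modules");
  return listPackages(nodeModules).filter((name) => isNativePackage(join(nodeModules, name)));
}
```

Running console.log(findNativeModules(process.cwd())) from a service root prints candidates for the known-issues doc. Packages that download prebuilt binaries usually store the .node file inside their own directory, so the heuristic tends to catch them too.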

4) Canary environment (this week)

Stand up a canary environment that mirrors production traffic patterns. Wire it to receive 1–5% of prod traffic via your gateway, but keep the canary behind a manual flag. You’ll swap Node base images and flip traffic on Jan 7, with instant rollback baked in.
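If your gateway accepts custom routing logic, sticky hash-based bucketing is one way to honor both the percentage and the manual flag. The sketch assumes a stable per-request key such as a user or session ID; CanaryConfig is a hypothetical shape whose values would come from your flag service or gateway config.

```typescript
import { createHash } from "node:crypto";

// Hypothetical config shape; the values would come from your flag service or gateway.
interface CanaryConfig {
  enabled: boolean; // the manual flag: nothing reaches the canary until it is flipped
  percent: number; // 1-5 while soaking, 10/50/100 on release day
}

// Maps a stable key (user or session ID) to a bucket in [0, 100).
function bucketOf(key: string): number {
  return createHash("sha256").update(key).digest().readUInt32BE(0) % 100;
}

// Sticky routing: for a given percentage, the same key always lands on the same side.
function routeToCanary(key: string, cfg: CanaryConfig): boolean {
  if (!cfg.enabled) return false;
  const percent = Math.min(100, Math.max(0, cfg.percent));
  return bucketOf(key) < percent;
}
```

Hashing keeps a given user on one side across requests, so a canary regression shows up as a consistent cohort instead of random flapping, and raising the percentage only adds users to the canary.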

5) Dependency alignment with Next.js and React (this week)

If you ship Next.js with the App Router, you likely spent early December patching the React Server Components RCE and follow‑on bugs. Make sure your app is on the fixed Next.js lines today so the runtime upgrade doesn’t collide with framework churn. The currently recommended versions include: 14.2.35 (14.x), 15.0.7, 15.1.11, 15.2.8, 15.3.8, 15.4.10, 15.5.9, and 16.0.10; canary channels landed 15.6.0‑canary.60 and 16.1.0‑canary.19. If you’re not there, prioritize that work now.
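A small CI check can keep a service from drifting back below those floors. This sketch hard-codes the versions listed above, flags anything on 14.x below 14.2.35, and returns "unknown" for lines the list does not cover so a human reviews them. checkNextVersion is a hypothetical helper and does not replace reading the advisories.

```typescript
// Patched floors from the December Next.js advisories, as listed in this guide.
const STABLE_FLOORS = ["14.2.35", "15.0.7", "15.1.11", "15.2.8", "15.3.8", "15.4.10", "15.5.9", "16.0.10"];
const CANARY_FLOORS = ["15.6.0-canary.60", "16.1.0-canary.19"];

type Verdict = "patched" | "vulnerable" | "unknown";

interface Version {
  major: number;
  minor: number;
  patch: number;
  canary?: number;
}

function parseVersion(raw: string): Version | undefined {
  const m = /^v?(\d+)\.(\d+)\.(\d+)(?:-canary\.(\d+))?$/.exec(raw.trim());
  if (!m) return undefined;
  return {
    major: Number(m[1]),
    minor: Number(m[2]),
    patch: Number(m[3]),
    canary: m[4] === undefined ? undefined : Number(m[4]),
  };
}

function checkNextVersion(installed: string): Verdict {
  const v = parseVersion(installed);
  if (!v) return "unknown";

  if (v.canary !== undefined) {
    // Canaries are compared only against a floor with the same base version.
    const floor = CANARY_FLOORS.map((s) => parseVersion(s)).find(
      (f) => f !== undefined && f.major === v.major && f.minor === v.minor && f.patch === v.patch,
    );
    if (!floor || floor.canary === undefined) return "unknown";
    return v.canary >= floor.canary ? "patched" : "vulnerable";
  }

  const floors = STABLE_FLOORS.map((s) => parseVersion(s)!);
  // Match the same major.minor line first; any other 14.x release is measured against 14.2.35.
  const floor =
    floors.find((f) => f.major === v.major && f.minor === v.minor) ??
    (v.major === 14 ? floors.find((f) => f.major === 14) : undefined);
  if (!floor) return "unknown";

  const diff = v.major - floor.major || v.minor - floor.minor || v.patch - floor.patch;
  return diff >= 0 ? "patched" : "vulnerable";
}
```

Feed it the version reported by npm ls next (or read from your lockfile) and fail the pipeline on anything other than "patched".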

Need a refresher on the React/Next.js incidents and patch tactics? See our playbooks “Next.js Security Update: Patch, Prove, Repeat” and “React2Shell: What Now? Secure Next.js This Week.”

6) Observability guardrails (this week)

Before the holiday, raise signal on four things per service: process restarts, memory growth, event loop lag, and TLS error rates. Add explicit alerts for “new pod image digest” and “Node.version changed” so ops doesn’t hunt for the needle when a canary misbehaves. Capture a 24‑hour baseline now; you’ll compare against it post‑upgrade.
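Two of those signals are cheap to emit from inside the process. This sketch uses Node's perf_hooks.monitorEventLoopDelay histogram for event loop lag and compares process.version against the value you record in today's baseline. startRuntimeMonitor, the 100 ms threshold, and the onAlert callback (which you would wire to your metrics or paging system) are assumptions to tune.

```typescript
import { monitorEventLoopDelay } from "node:perf_hooks";

// Hypothetical helper: alerts on a Node version change and on high event loop lag.
function startRuntimeMonitor(
  baselineNodeVersion: string, // the process.version value captured in today's baseline
  onAlert: (message: string) => void,
  lagThresholdMs = 100,
  intervalMs = 10_000,
): () => void {
  if (process.version !== baselineNodeVersion) {
    onAlert(`Node version changed: ${baselineNodeVersion} -> ${process.version}`);
  }

  const lag = monitorEventLoopDelay({ resolution: 20 });
  lag.enable();

  const timer = setInterval(() => {
    const p99Ms = lag.percentile(99) / 1e6; // histogram values are in nanoseconds
    const rssMb = process.memoryUsage().rss / 1024 / 1024;
    if (p99Ms > lagThresholdMs) {
      onAlert(`event loop p99 lag ${p99Ms.toFixed(1)} ms (rss ${rssMb.toFixed(0)} MB)`);
    }
    lag.reset(); // measure each interval independently
  }, intervalMs);
  timer.unref(); // do not keep the process alive just for monitoring

  // Call the returned function on shutdown to stop sampling.
  return () => {
    clearInterval(timer);
    lag.disable();
  };
}
```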

7) Release‑day choreography (Jan 7)

On the morning of Jan 7, swap your base images to the patched Node builds. Rebuild Tier A services first, warm the canary, then shift 10% of traffic to it. Hold for 15–30 minutes while you watch p95 latency, error rates, and worker exit codes. If clean, step to 50%, then 100%. Tier B and Tier C follow. Rollback is “revert the digest,” not “rebuild from scratch.”
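Writing the choreography down as code means nobody improvises on release morning. In this sketch, setTraffic, gatePasses, and wait are hypothetical hooks into your gateway, telemetry, and scheduler. The plan walks Tier A through 10%, 50%, and 100% before moving on to Tier B and Tier C. On a failed gate it routes that tier back to 0% on the new image, which assumes the previous digest is still serving the remainder.

```typescript
type Tier = "A" | "B" | "C";

interface Step {
  tier: Tier;
  percent: number;
  holdMinutes: number;
}

// Tier A goes 10% -> 50% -> 100% first, then Tier B, then Tier C.
function rolloutPlan(tiers: Tier[] = ["A", "B", "C"], steps: number[] = [10, 50, 100], holdMinutes = 15): Step[] {
  return tiers.flatMap((tier) => steps.map((percent) => ({ tier, percent, holdMinutes })));
}

// setTraffic, gatePasses and wait are hypothetical hooks into your gateway, telemetry and scheduler.
async function executeRollout(
  plan: Step[],
  setTraffic: (tier: Tier, percent: number) => Promise<void>,
  gatePasses: (tier: Tier) => Promise<boolean>,
  wait: (minutes: number) => Promise<void>,
): Promise<{ ok: boolean; haltedAt?: Step }> {
  for (const step of plan) {
    await setTraffic(step.tier, step.percent);
    await wait(step.holdMinutes); // hold while p95 latency, error rates and exit codes settle
    if (!(await gatePasses(step.tier))) {
      // Rollback: send this tier's traffic back to the previous image digest.
      await setTraffic(step.tier, 0);
      return { ok: false, haltedAt: step };
    }
  }
  return { ok: true };
}
```

Passing a wait that resolves immediately and a scripted gatePasses turns this into a tabletop drill you can rehearse before Jan 7.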

People also ask: Are Node.js apps safe to run until January 7?

“Safe” is the wrong frame. The disclosed issues are being addressed, and the team chose a date that minimizes holiday risk while enabling a complete fix. If your app is properly containerized, patched at the OS level, and shielded with a WAF and rate limits, your residual risk is lower—but not zero. The right move is to harden inputs, turn on extra logging for suspicious patterns, and get your upgrade muscle memory ready now. Use the time; don’t idle it away.

People also ask: Which versions will receive patches?

The maintainers indicated patches for active lines: 25.x (current), 24.x (LTS), 22.x (LTS), and 20.x (LTS). If you’re on anything older, you’re outside standard support and should plan an accelerated migration. If you must keep an EOL line alive, lock it behind compensating controls—private networks, strict firewall rules, and a front proxy that normalizes traffic.

Map the runtime fixes to your application layer

Security releases are not isolated events. If you’re running Next.js with the App Router, your December probably included a patch sprint around the React Server Components protocol. The RCE (CVSS 10) was addressed in the React stack on December 3, followed by two additional RSC flaws acknowledged on December 11 with patched Next.js versions across 14.x, 15.x, and 16.x lines. The RCE fix remains effective; the later issues required their own upgrades. The practical implication: don’t let your framework and runtime upgrades drift out of sync. Land the framework patches before Jan 7 so your Node rollout is one variable, not two.

Node.js security releases: what to prepare now

Use this short checklist to prep without burning your holiday:

- Create a spreadsheet (or a script) that outputs: service name, Node version, base image digest, native modules present, external exposure, and owner.

- Pre‑compile native modules on a staging host that matches production. Store the artifacts in a private registry to speed rebuilds.

- Snapshot lockfiles today. When you update only Node on Jan 7, you’ll avoid surprise transitive bumps.

- Test TLS handshakes against your upstreams (databases, Kafka, payment gateways). Runtime OpenSSL changes can surface here first.

- Document a one‑click rollback for each service: the previous image digest and a command snippet ops can run half‑asleep.
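For the TLS item, a handshake probe that runs on both the current and the patched image gives a direct before-and-after comparison. This sketch uses node:tls with default certificate verification, so an untrusted or expired chain shows up as a failure. probeTls is a hypothetical helper; the hosts you pass would be your database, broker, or payment sandbox endpoints.

```typescript
import { connect } from "node:tls";
import { isIP } from "node:net";

interface HandshakeResult {
  target: string;
  ok: boolean;
  protocol?: string | null; // e.g. "TLSv1.3"
  cipher?: string;
  error?: string;
}

// Opens a TLS connection, records the negotiated protocol and cipher, then closes it.
function probeTls(host: string, port = 443, timeoutMs = 5000): Promise<HandshakeResult> {
  const target = `${host}:${port}`;
  return new Promise((resolve) => {
    const socket = connect({
      host,
      port,
      servername: isIP(host) ? undefined : host, // SNI must not carry an IP address
      timeout: timeoutMs,
    });
    socket.once("secureConnect", () => {
      resolve({ target, ok: true, protocol: socket.getProtocol(), cipher: socket.getCipher().name });
      socket.end();
    });
    socket.once("timeout", () => {
      resolve({ target, ok: false, error: `timed out after ${timeoutMs} ms` });
      socket.destroy();
    });
    // Certificate verification failures land here because rejectUnauthorized defaults to true.
    socket.once("error", (err) => resolve({ target, ok: false, error: err.message }));
  });
}
```

Running the same list of targets through probeTls on both images and printing the results with console.table makes a changed protocol, cipher, or verification error easy to spot.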

If you’d like a deeper, hour‑by‑hour drill, our earlier incident plans still apply. Start with the 48‑hour Node.js patch plan and the Dec 18 security release playbook—the dates changed, but the mechanics hold.

Performance and compatibility pitfalls to expect

Most security releases are drop‑in replacements, but there are three categories where teams stumble:

1) Native addon ABI mismatches

If the patched Node build increments V8 or N‑API internals, some native addons will demand a rebuild. That can surface as crashes on module load, segfaults under load, or “module not found” errors masked by lazy imports. Catch these in staging with synthetics that exercise all code paths, not just the happy path.
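One cheap synthetic is to load every native module eagerly at the start of a staging run, so lazy imports cannot hide a bad build. The sketch assumes you already have the module list (for example, from your native-module inventory). It resolves names from the service root via createRequire and also records process.versions.modules, the ABI version that addons are compiled against. smokeLoad is a hypothetical helper.

```typescript
import { createRequire } from "node:module";
import { resolve } from "node:path";

interface LoadResult {
  name: string;
  ok: boolean;
  error?: string;
}

// Requires each module from the project root so failures surface at startup, not under load.
function smokeLoad(modules: string[], projectDir: string = process.cwd()): { abi: string; results: LoadResult[] } {
  // createRequire needs an absolute path to resolve from; the file itself does not have to exist.
  const requireFromProject = createRequire(resolve(projectDir, "package.json"));
  const results = modules.map((name): LoadResult => {
    try {
      requireFromProject(name);
      return { name, ok: true };
    } catch (err) {
      return { name, ok: false, error: (err as Error).message.split("\n")[0] };
    }
  });
  // NODE_MODULE_VERSION of the running binary, useful next to any load failures.
  return { abi: process.versions.modules, results };
}
```

Setting process.exitCode = 1 when any result has ok: false lets the staging job fail loudly instead of logging and moving on.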

2) TLS and crypto edges

Changes in OpenSSL defaults can break upstreams that rely on older ciphers or renegotiation behavior. Pre‑flight with integration tests against sandbox environments for your payment provider, message bus, and data warehouse. If you terminate TLS at a proxy, verify both sides—the proxy and Node’s client libraries.
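To see whether the OpenSSL side actually moved, snapshot the runtime's TLS defaults on the current image, snapshot them again on the patched one, and diff the two. This sketch reads process.versions.openssl, tls.DEFAULT_MIN_VERSION, tls.DEFAULT_MAX_VERSION, tls.DEFAULT_ECDH_CURVE, and tls.getCiphers(). It does not capture per-connection options that your client libraries set themselves, so the integration tests are still needed.

```typescript
import * as tls from "node:tls";

interface TlsDefaults {
  openssl: string;
  minVersion: string;
  maxVersion: string;
  ecdhCurve: string;
  ciphers: string[];
}

// Captures process-wide TLS defaults; per-connection options set by client libraries are not included.
function snapshotTlsDefaults(): TlsDefaults {
  return {
    openssl: process.versions.openssl,
    minVersion: tls.DEFAULT_MIN_VERSION,
    maxVersion: tls.DEFAULT_MAX_VERSION,
    ecdhCurve: tls.DEFAULT_ECDH_CURVE,
    ciphers: tls.getCiphers().sort(),
  };
}

// Lists readable differences between two snapshots (e.g. old image vs patched image).
function diffTlsDefaults(before: TlsDefaults, after: TlsDefaults): string[] {
  const changes: string[] = [];
  for (const key of ["openssl", "minVersion", "maxVersion", "ecdhCurve"] as const) {
    if (before[key] !== after[key]) changes.push(`${key}: ${before[key]} -> ${after[key]}`);
  }
  const removed = before.ciphers.filter((c) => !after.ciphers.includes(c));
  const added = after.ciphers.filter((c) => !before.ciphers.includes(c));
  if (removed.length > 0) changes.push(`ciphers removed: ${removed.join(", ")}`);
  if (added.length > 0) changes.push(`ciphers added: ${added.join(", ")}`);
  return changes;
}
```

Writing JSON.stringify(snapshotTlsDefaults()) to a file in the old container and diffing it inside the new one keeps this check independent of whether any upstream is reachable.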

3) Container base drift

Pulling “latest” on release day is how you introduce a second, invisible change: a newer glibc or musl, or a CA bundle update that surprises a subset of endpoints. Use pinned digests and bump them deliberately, for example through Renovate pull requests.
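A lint step can enforce the pinning rule before an image is ever built. This sketch scans Dockerfile text for FROM lines whose image lacks an @sha256 digest, skipping references to earlier build stages and to scratch. It does not handle line continuations or expand ARG substitutions (such references get flagged), so treat it as a guardrail rather than a full Dockerfile parser.

```typescript
// Returns base images referenced by FROM without an immutable @sha256 digest.
function unpinnedBaseImages(dockerfile: string): string[] {
  const stages = new Set<string>(["scratch"]); // names that are not registry images
  const unpinned: string[] = [];
  for (const line of dockerfile.split(/\r?\n/)) {
    const m = /^\s*FROM\s+(?:--platform=\S+\s+)?(\S+)(?:\s+AS\s+(\S+))?/i.exec(line);
    if (!m) continue;
    const image = m[1];
    const isStageRef = stages.has(image.toLowerCase());
    if (!isStageRef && !/@sha256:[a-f0-9]{64}$/.test(image)) unpinned.push(image);
    if (m[2]) stages.add(m[2].toLowerCase()); // later FROM lines may reference this stage
  }
  return unpinned;
}
```

Fed with readFileSync("Dockerfile", "utf8") in CI, a non-empty result can fail the build and name the offending base image.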

How to communicate the plan to leadership

Executives don’t want vulnerability acronyms; they want blast radius and downtime answers. Send a two‑slide brief:

- Slide 1: “What/When/Impact” — security patches across Node 25/24/22/20 on January 7; we’ve pre‑staged builds and can roll out with under 60 minutes of elevated risk per tier.

- Slide 2: “Controls and Rollback” — canary release, real‑time telemetry, one‑click rollback by image digest; no change freeze violation required.

Offer a dry‑run date in the final week of December for Tier‑B services. Stakeholders relax when they see rehearsal artifacts.

Does this affect our SLOs or compliance?

Probably not, if you handle it as a controlled change. For SOC 2 and ISO 27001, the key is documenting that you evaluated vendor advisories promptly, prepared a mitigation plan, and executed within a reasonable window. The Jan 7 timing gives you enough runway to log decisions, approvals, and test evidence in your change management system.

Quick commands and config patterns that help

Keep it simple and scriptable:

- Pin images: record your current digest with docker inspect <image> --format '{{.RepoDigests}}' and paste the name@sha256:<digest> entry (without the surrounding brackets) into your Dockerfile FROM line.

- Runtime parity: expose process.versions on a debug endpoint in non‑prod. Snapshot it before and after the upgrade.

- Health checks: add a lightweight “crypto and DNS” check to catch OpenSSL or resolver regressions quickly.

- Rollout gates: require clean p95 latency deltas (±5%), error rates (±0.2%), and zero unexpected restarts before advancing traffic.
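The rollout gate in the last item is simple arithmetic, and encoding it avoids judgment calls under pressure. This sketch reads ±5% as a relative change in p95 latency and ±0.2% as an absolute change in error rate (percentage points); that interpretation is ours. evaluateGate and the Metrics shape are hypothetical, and the baseline would be the 24‑hour capture from before the upgrade.

```typescript
interface Metrics {
  p95LatencyMs: number;
  errorRatePct: number; // errors as a percentage of requests, e.g. 0.4 means 0.4%
  unexpectedRestarts: number;
}

interface GateResult {
  pass: boolean;
  reasons: string[];
}

function evaluateGate(baseline: Metrics, canary: Metrics, maxLatencyDeltaPct = 5, maxErrorDeltaPts = 0.2): GateResult {
  const reasons: string[] = [];

  if (baseline.p95LatencyMs <= 0) {
    reasons.push("no usable latency baseline");
  } else {
    // Relative change in p95 latency, in percent.
    const latencyDeltaPct = ((canary.p95LatencyMs - baseline.p95LatencyMs) / baseline.p95LatencyMs) * 100;
    if (Math.abs(latencyDeltaPct) > maxLatencyDeltaPct) {
      reasons.push(`p95 latency moved ${latencyDeltaPct.toFixed(1)}%`);
    }
  }

  // Absolute change in error rate, in percentage points.
  const errorDeltaPts = canary.errorRatePct - baseline.errorRatePct;
  if (Math.abs(errorDeltaPts) > maxErrorDeltaPts) {
    reasons.push(`error rate moved ${errorDeltaPts.toFixed(2)} points`);
  }

  if (canary.unexpectedRestarts > 0) {
    reasons.push(`${canary.unexpectedRestarts} unexpected restart(s)`);
  }

  return { pass: reasons.length === 0, reasons };
}
```

Because the bounds are symmetric, a sudden large improvement also blocks the step; that is usually worth a look, since requests that fail fast can look fast.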

Zooming out: December’s security drumbeat isn’t slowing

Between high‑severity Node advisories and recent browser/WebKit fixes, December has been a busy security month. The signal is clear: treat runtime and framework advisories as operational events. Bake response muscle into your regular cadence—just like you do for capacity and cost.

What to do next

For developers:

- Get every service on the fixed Next.js lines if you use the App Router (14.2.35; 15.0.7/15.1.11/15.2.8/15.3.8/15.4.10/15.5.9; 16.0.10; canaries 15.6.0‑canary.60 and 16.1.0‑canary.19).

- Pre‑compile native addons on a prod‑like host and stash the artifacts.

- Stand up a canary with automated rollback and traffic steps.

- Capture a telemetry baseline now; alert on Node version and image digest changes.

For business owners and engineering leaders:

- Approve a narrow change window on January 7 with a named incident commander.

- Require a rollback plan per service and a one‑page risk register.

- Keep customer‑facing comms ready but unannounced; you probably won’t need them if you rehearse.

Want help shipping this safely?

If you don’t have the bench to prep and execute across dozens of services, bring in a partner to share the load. We’ve already shipped multiple incident‑driven patch plans this month. Start with our 72‑hour Node.js release checklist, then reach out via our services or contact pages to set up a short, tactical engagement.

FAQ: Will the Node.js security releases cause breaking changes?

Security releases aim to be drop‑in. Where teams hit bumps, it’s usually ecosystem fallout—native addons, OpenSSL defaults, or container base drift—not intentional API breaks. That’s why this plan focuses on reproducibility and canaries. Do the prep now, and Jan 7 becomes a routine morning.
