Node.js January 2026 Security Release: What to Do

The Node.js January 2026 security release landed on January 13 with coordinated updates to 20.20.0, 22.22.0, 24.13.0, and 25.3.0. Across the lines, it addresses eight CVEs (three High, four Medium, one Low) and bumps key dependencies like undici and c‑ares. If you maintain web APIs, workers, or CLI tools in production, treat this as a priority sprint—not a background chore.

What’s actually in the Node.js January 2026 security release?

Here’s the short version developers and SREs need. The release closes a handful of bugs that span memory confidentiality, permission model enforcement, TLS callback handling, and HTTP/2 crash resilience, plus two line-specific patches: one that only affects 24.x and one that only affects 25.x.

Highlights you should map to your code paths immediately:

• Buffer allocation race condition (High): Under precise timing when the vm module is used with a timeout, Buffer.alloc and typed arrays could end up non‑zeroed, risking leakage of in‑process secrets. If you host untrusted scripts or accept user‑controlled inputs that influence workload timing, don’t sit on this one.

• Permission model symlink bypass (High): With crafted symlink chains, scripts could escape intended --allow-fs-read/--allow-fs-write boundaries. If you sandbox tools or run build steps with the permission model, this undermines the boundary you think you have.

• HTTP/2 malformed HEADERS DoS (High): A bad HEADERS frame could crash a process by surfacing a low‑level TLS socket error without a handler. The fix hardens default behavior, but you should still attach explicit error handlers on secure sockets in high‑exposure services.

• async_hooks uncatchable stack overflow (Medium): Under certain recursion patterns with async_hooks or AsyncLocalStorage, processes could terminate outside normal error handling. The patch routes these correctly, improving recoverability.

• TLS client certificate memory leak (Medium, 24.x only): A leak when converting X.509 fields could grow memory steadily on repeated client auth handshakes. If you run mutual TLS on 24.x, you benefit directly.

• Permission model UDS bypass (Medium, 25.x only): On 25.x, local Unix Domain Socket connections could slip past network restrictions with --permission. If you gate IPC access via that boundary, upgrade.

• TLS callback exception handling (Medium): Exceptions thrown inside PSK/ALPN callbacks could bypass standard handlers, leading to process crashes or file descriptor leaks. If you negotiate ALPN heavily (HTTP/2, HTTP/3 gateways) or use PSK, this is relevant.

• fs.futimes() bypass on read‑only (Low): Timestamps could be modified even under read‑only constraints. That’s not a common production breaker but consider audit implications.
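The HTTP/2 item above recommends explicit error handlers on secure sockets. Here is a minimal sketch of that idea. The helper name attachSocketErrorHandlers and the log callback are ours for illustration, not part of Node; the 'secureConnection' event and the socket 'error' event are standard.

```javascript
// Attach an 'error' listener to every TLS socket the server accepts.
// `server` is anything that emits 'secureConnection' (tls.Server and
// HTTP/2 secure servers do). `log` is a hypothetical logging callback.
function attachSocketErrorHandlers(server, log = console.error) {
  server.on('secureConnection', (socket) => {
    socket.on('error', (err) => {
      // With a listener present, the error no longer becomes an
      // unhandled 'error' event on the socket.
      log(`TLS socket error: ${err.code || err.message}`);
    });
  });
  return server;
}

// Usage with a real server (key and cert are placeholders you supply):
// const http2 = require('node:http2');
// const server = http2.createSecureServer({ key, cert });
// attachSocketErrorHandlers(server);
```

This is defense in depth, not a substitute for the patched runtime.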

Which versions are patched?

Patched releases are 20.20.0 (LTS), 22.22.0 (LTS), 24.13.0 (Active LTS), and 25.3.0 (Current). If your fleet isn’t on one of these, you’re carrying known risk today. Undici was refreshed across lines, and c‑ares is at 1.34.6, which matters for DNS resolution reliability and security.
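If you want a quick guard in a startup script or health check, something like the sketch below compares the running version against the patched releases listed above. The function name isPatched is an assumption, and the version table only covers the four lines named in this post, so anything else (including end-of-life lines) is reported as not patched.

```javascript
// Minimum patched version per major line, taken from the list above.
const PATCHED = {
  20: [20, 20, 0],
  22: [22, 22, 0],
  24: [24, 13, 0],
  25: [25, 3, 0],
};

// Returns true when `version` (for example "v22.21.1") is at or above the
// patched release for its major line. Unknown lines return false.
function isPatched(version) {
  const parts = version.replace(/^v/, '').split('.').map(Number);
  const min = PATCHED[parts[0]];
  if (!min || parts.length < 3 || parts.some(Number.isNaN)) return false;
  for (let i = 0; i < 3; i++) {
    if (parts[i] !== min[i]) return parts[i] > min[i];
  }
  return true; // exactly the patched version
}

console.log(process.version, isPatched(process.version) ? 'patched' : 'NOT patched');
```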

Is the Node.js January 2026 security release critical for my app?

Probably yes if any of these describe you:

• You serve public traffic over HTTP/2.

• You use AsyncLocalStorage for tracing, context propagation, or APM.

• You lean on the permission model (still experimental on 20.x) in CI tools, scripts, or production sandboxes.

• You terminate TLS and hook ALPN or PSK callbacks (edge proxies, internal mesh gateways, or custom mutual TLS).

• You run 24.x with client certificate auth.

Note: end‑of‑life lines such as Node 18.x no longer receive upstream fixes. If you’re still on 18.x, plan the jump to 22.x or 24.x now and treat this month’s security work as your forcing function.

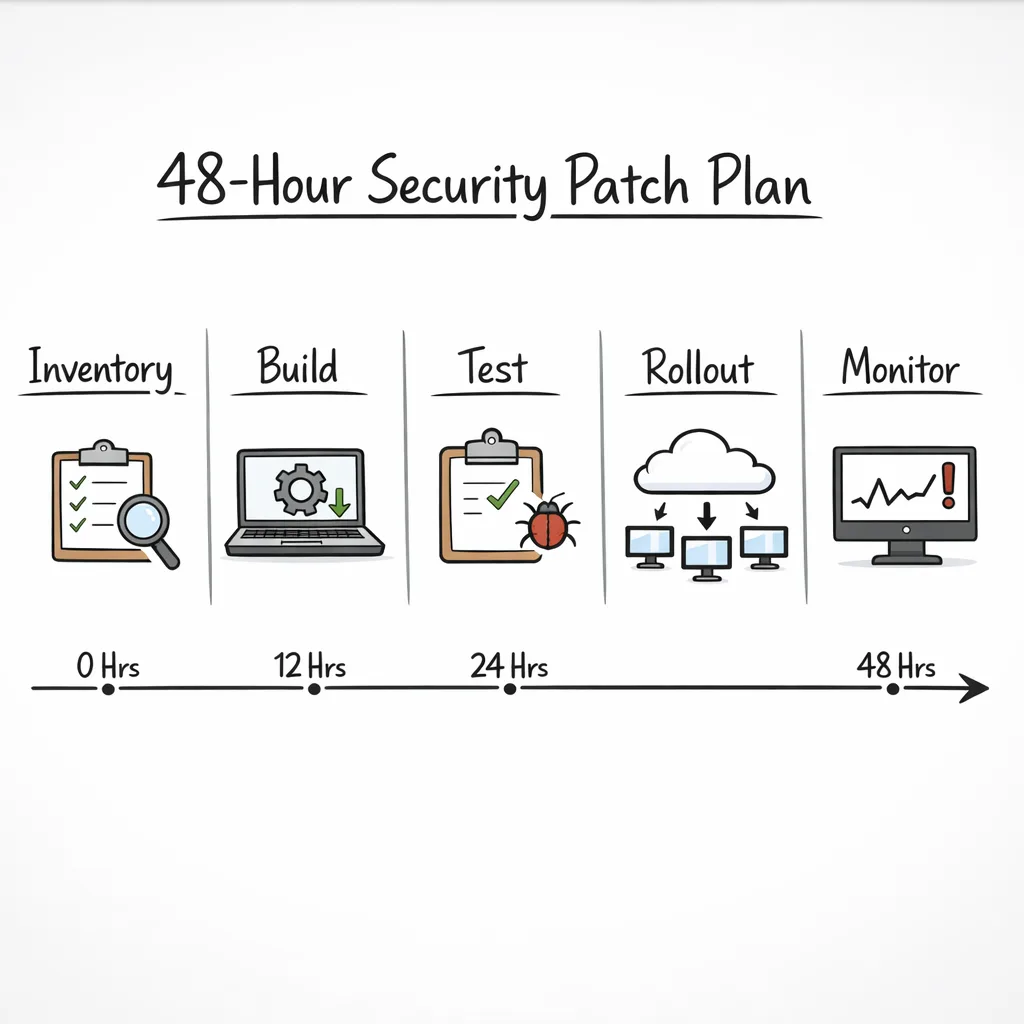

The 48‑hour patch plan (use this with your team)

Here’s a time‑boxed, field‑tested approach that gets most organizations to safe ground without freezing delivery.

Hour 0–2: Inventory and exposure mapping

• Snapshot runtime versions for every service and job (node -v, container base images, Lambda/Functions, CI runners). Don’t forget cron boxes and ephemeral workers.

• Map CVEs to features actually used: HTTP/2, TLS callbacks, permission model, vm with timeouts, mTLS.

• Classify services by blast radius: external‑facing, partner‑facing, internal only, CI/build scripts. Prioritize anything exposed to the internet and anything that can move data across boundaries.
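To speed up the mapping step, a rough text scan can flag which services touch the affected features. The sketch below is a heuristic only: the pattern table and the function name detectFeatures are our own, the regexes will miss dynamic imports and wrappers, and a hit means "look closer," not "vulnerable."

```javascript
// Hypothetical mapping from a fix area to source or command-line patterns
// that suggest a service uses it. Rough heuristics, not a parser.
const FEATURE_PATTERNS = {
  'http2': /(require\(|from\s+)['"](node:)?http2['"]/,
  'vm (check for timeout use)': /(require\(|from\s+)['"](node:)?vm['"]/,
  'async_hooks / AsyncLocalStorage': /AsyncLocalStorage|async_hooks/,
  'TLS PSK/ALPN callbacks': /pskCallback|ALPNCallback/,
  'mTLS (client certificates)': /requestCert/,
  'permission model': /--permission|--experimental-permission|--allow-fs-(read|write)/,
};

// Returns the names of all feature areas whose pattern matches `text`.
// `text` can be a source file, a Dockerfile CMD, or a package.json script.
function detectFeatures(text) {
  return Object.keys(FEATURE_PATTERNS).filter((name) => FEATURE_PATTERNS[name].test(text));
}

console.log(detectFeatures("const http2 = require('node:http2');"));
```

Feed it file contents from your repos and start scripts, then review the hits by hand.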

Hour 2–8: Pick your branch and cut builds

• If you’re on 24.x today, go to 24.13.0. On 22.x, go to 22.22.0. On 20.x, go to 20.20.0. On 25.x, go to 25.3.0.

• Rebuild images and lock Node version explicitly in your Dockerfiles/base images. Verify undici and native addons rebuild cleanly.

• For Serverless, switch the runtime where applicable or vendor the patched binary in custom layers if the platform lags. For containerized workloads, push a new tag and wire it into your deploy pipeline.

Hour 8–18: Targeted tests where it matters

• HTTP/2 servers: hammer with malformed and oversized headers; verify the process no longer crashes and that errors are logged and contained.

• TLS paths with ALPN/PSK: simulate exceptions in callbacks and confirm they flow through error handlers; check for file descriptor stability under load.

• AsyncLocalStorage users: run deep async graphs (load + traces) and confirm that stack overflows surface as catchable errors instead of terminating the process.

• Permission model: attempt symlink escapes and UDS connections from restricted contexts; confirm failures are enforced.

• 24.x with client certs: run mTLS load tests and watch memory over time.
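For the memory check, "watch memory over time" is easier to act on as a number. A minimal sketch, assuming you sample RSS inside the process under test: it fits a least-squares line through the samples and reports growth in bytes per second. The function names and the sampling approach are ours; what counts as too much growth is for you to decide.

```javascript
// Least-squares slope of RSS over time, in bytes per second.
// `samples` is an array of { t: millisecondsSinceStart, rss: bytes }.
function rssSlopeBytesPerSec(samples) {
  const n = samples.length;
  if (n < 2) return 0;
  const meanT = samples.reduce((sum, p) => sum + p.t, 0) / n;
  const meanR = samples.reduce((sum, p) => sum + p.rss, 0) / n;
  let num = 0;
  let den = 0;
  for (const p of samples) {
    num += (p.t - meanT) * (p.rss - meanR);
    den += (p.t - meanT) ** 2;
  }
  return den === 0 ? 0 : (num / den) * 1000; // per ms -> per second
}

// Sample this process's RSS every `intervalMs` until `durationMs` has passed.
function sampleRss(durationMs, intervalMs) {
  return new Promise((resolve) => {
    const start = Date.now();
    const samples = [];
    const timer = setInterval(() => {
      const t = Date.now() - start;
      samples.push({ t, rss: process.memoryUsage().rss });
      if (t >= durationMs) {
        clearInterval(timer);
        resolve(samples);
      }
    }, intervalMs);
  });
}

// Example: sampleRss(30 * 60 * 1000, 5000).then((s) => console.log(rssSlopeBytesPerSec(s)));
```

Compare the slope before and after the upgrade under the same load rather than judging a single run.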

Hour 18–36: Staged rollout with guardrails

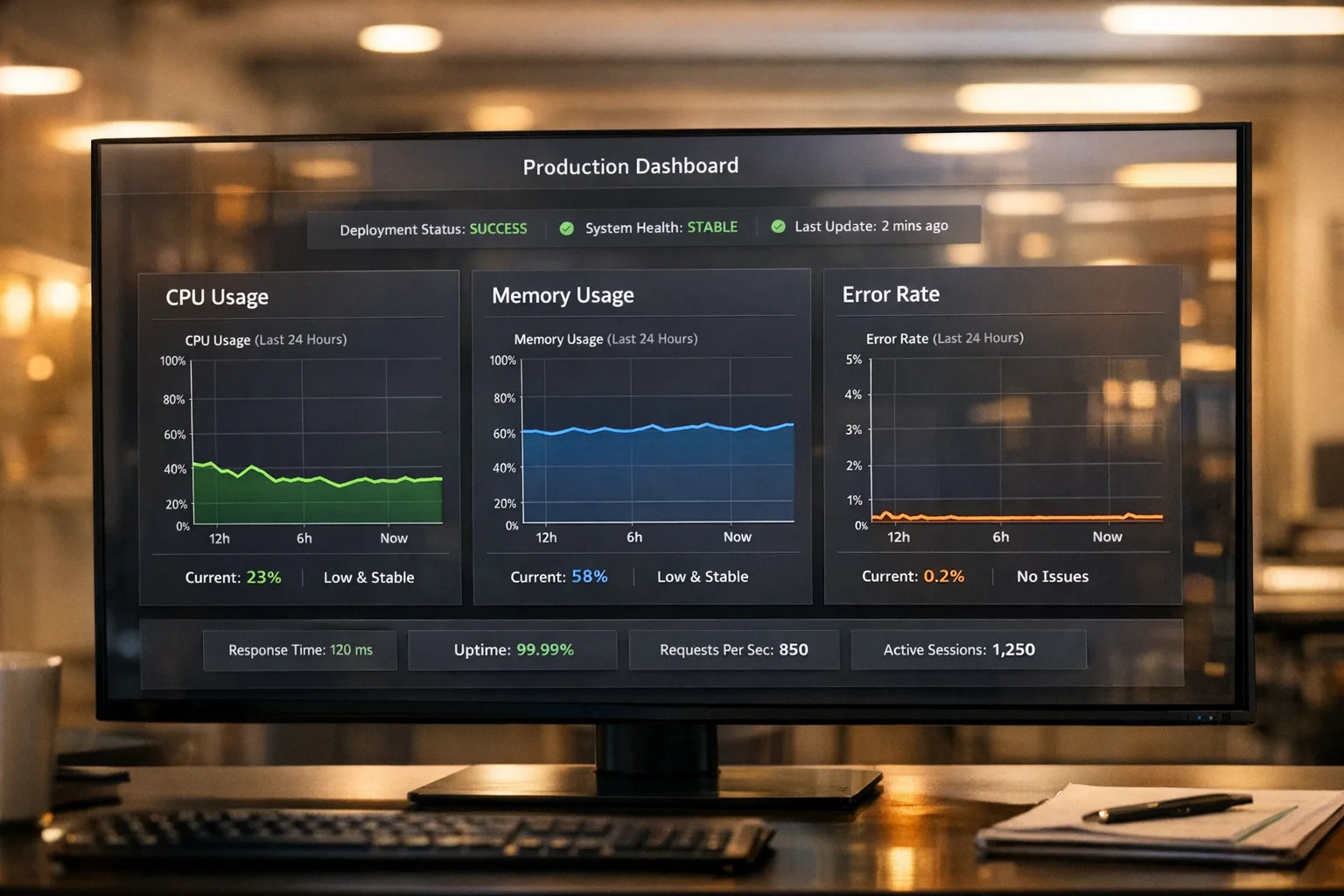

• Roll out to a small percent or a single AZ/region. Enable canary alarms for crash rates, RSS growth, FD count, and 5xx spikes.

• Keep deploys small and reversible; one service at a time beats a big‑bang release.

• Watch for subtle changes in DNS behavior (c‑ares update) and HTTP semantics (undici). Fix config drift early.
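The canary alarms above can be reduced to a simple gate that compares canary metrics to the current baseline. This is a sketch under stated assumptions: the metric names, the ratio thresholds, and the function canaryBreaches are all hypothetical and should be replaced with whatever your monitoring actually exposes.

```javascript
// Hypothetical thresholds: maximum allowed canary/baseline ratio per metric.
const MAX_RATIO = { crashRate: 1.0, rssGrowth: 1.2, fdCount: 1.2, http5xxRate: 1.1 };

// Returns the metrics where the canary exceeds its allowed ratio.
// With a zero baseline, any nonzero canary value counts as a breach.
function canaryBreaches(baseline, canary, limits = MAX_RATIO) {
  return Object.keys(limits).filter((metric) => {
    const base = baseline[metric] ?? 0;
    const current = canary[metric] ?? 0;
    return base === 0 ? current > 0 : current / base > limits[metric];
  });
}

const breaches = canaryBreaches(
  { crashRate: 0, rssGrowth: 10, fdCount: 100, http5xxRate: 0.01 },
  { crashRate: 0, rssGrowth: 11, fdCount: 150, http5xxRate: 0.01 },
);
console.log(breaches.length ? `halt rollout: ${breaches.join(', ')}` : 'proceed');
```

An empty result means proceed to the next stage; anything else is a reason to pause and look.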

Hour 36–48: Finish the fleet and close the loop

• Complete the rollout, update your runtime matrix, and set a reminder to remove any temporary mitigations you added.

• Document anything non‑obvious—CI images, base AMIs, and sidecars are easy to miss and will bite you next time.

Quick mitigations if you can’t upgrade today

Upgrading is the right answer. If you’re blocked and need breathing room:

• HTTP/2 crash surface: explicitly attach error handlers to secure sockets and consider rate‑limiting or temporarily downgrading to HTTP/1.1 for high‑risk endpoints.

• Permission model: avoid relying on the permission model for hard isolation this week; run sensitive steps in separate containers or VMs.

• TLS callbacks: wrap PSK/ALPN callbacks defensively with try/catch and fail‑closed behavior; add per‑connection timeouts and connection caps.

• vm + timeout usage: disable untrusted guest code paths or isolate them in a separate process/pod with a minimal permission footprint.
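For the TLS callback mitigation, the defensive wrapping can look roughly like this. The wrapper names are ours. The fail-closed return values follow the documented contracts of the server-side ALPNCallback (returning undefined rejects the connection with a fatal alert) and pskCallback (returning null stops the negotiation).

```javascript
// Wrap a server-side ALPNCallback so a thrown exception rejects the
// handshake instead of escaping the callback.
function safeAlpnCallback(select, log = console.error) {
  return (info) => {
    try {
      return select(info); // must be one of info.protocols, or undefined
    } catch (err) {
      log(err);
      return undefined; // fail closed: no protocol selected
    }
  };
}

// Same idea for a server-side pskCallback(socket, identity).
function safePskCallback(lookup, log = console.error) {
  return (socket, identity) => {
    try {
      return lookup(socket, identity); // a key buffer, or null
    } catch (err) {
      log(err);
      return null; // fail closed: stop PSK negotiation
    }
  };
}

// Usage (options other than the callback are placeholders):
// const tls = require('node:tls');
// tls.createServer({ key, cert, ALPNCallback: safeAlpnCallback(pickProtocol) });
```

Keep the wrappers after you upgrade if you like; they cost almost nothing and make the failure mode explicit.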

Testing checklist you can copy

Use this as a runbook with your QA or SRE lead:

• Crash containment: prove the process stays up under malformed HTTP/2 inputs and that alarms fire as expected.

• Error routing: intentionally throw in TLS callbacks and confirm you hit your centralized error handler and observability pipeline.

• Memory: baseline RSS before and after upgrade, then run a 30‑minute soak test. Pay special attention to 24.x mTLS endpoints.

• DNS: tail logs for resolution timeouts or oddities after the c‑ares bump; confirm connection retry behavior is unchanged.

• Async context integrity: verify AsyncLocalStorage context does not bleed across concurrent requests under load.

• Native modules: rebuild and smoke‑test node‑gyp addons; ABI compatibility should be stable within a major line, but verify startup and hot paths.
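The async context check in the list above is simple to script. Here is a minimal sketch that runs overlapping simulated requests and counts how many read back the wrong store. The request shape, the sleep jitter, and the function names are assumptions; a real check would go through your HTTP stack and tracing library.

```javascript
const { AsyncLocalStorage } = require('node:async_hooks');
const { setTimeout: sleep } = require('node:timers/promises');

const als = new AsyncLocalStorage();

// Simulate one request: store an id, hop through awaits, then read it back.
async function handle(id) {
  return als.run({ id }, async () => {
    await sleep(Math.random() * 5); // interleave with other requests
    await Promise.resolve();
    return als.getStore().id === id;
  });
}

// Run `n` overlapping requests and count how many saw the wrong context.
async function countContextBleeds(n) {
  const results = await Promise.all(Array.from({ length: n }, (_, i) => handle(i)));
  return results.filter((ok) => !ok).length;
}

countContextBleeds(200).then((bad) => console.log(`context mismatches: ${bad}`));
```

Any nonzero count deserves investigation before you continue the rollout.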

Dependency notes that matter (undici and c‑ares)

Undici powers Node’s built‑in fetch, so it is in play in most modern Node projects even if you never added it as a dependency. The security release updates undici across supported lines. That can subtly change connection pooling, header casing, or timeout behavior if you were relying on edge cases. Keep an eye on outbound HTTP error rates and retry patterns post‑upgrade.

c‑ares handles async DNS resolution. The jump to 1.34.6 brings security and correctness fixes; in operational terms, it’s a good idea to double‑check your resolver settings in container networks and confirm you’re not masking DNS faults with aggressive retries.
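One way to make DNS faults visible is a small probe with explicit resolver settings. This is a sketch, assuming timeout and tries values that are purely illustrative; the probe function and its injectable resolve4 parameter are ours so the logic can be tested without a network.

```javascript
const { Resolver } = require('node:dns').promises;

// Explicit settings so retries cannot quietly hide slow or failing DNS.
// The values (2000 ms, 2 tries) are assumptions, not recommendations.
const resolver = new Resolver({ timeout: 2000, tries: 2 });

// Time a single A-record lookup and report success or the error code.
async function probe(hostname, resolve4 = (h) => resolver.resolve4(h)) {
  const start = process.hrtime.bigint();
  const elapsedMs = () => Number(process.hrtime.bigint() - start) / 1e6;
  try {
    const addresses = await resolve4(hostname);
    return { hostname, ok: true, addresses, ms: elapsedMs() };
  } catch (err) {
    return { hostname, ok: false, code: err.code, ms: elapsedMs() };
  }
}

// Example: probe('example.com').then(console.log);
```

Run it from inside the container network before and after the upgrade and compare latency and error codes.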

People also ask

Do I need to restart every container and function?

Yes. The patched Node.js binary only takes effect for processes started after the upgrade. For long‑running hosts, cycle the node processes or recycle the nodes. For serverless, publish a new version/alias or redeploy with the patched runtime.

We’re pinned to Node 18.x—what’s the quickest path off it?

Target 22.x if you want a long runway; target 24.x if you’re ready to align with the current LTS. Keep the diff minimal: upgrade Node, rebuild native modules, run your integration tests, then deal with optional features later. If you need a concrete one‑week plan, see our 7‑day ship plan for age‑rating workflows—it’s a different topic, but the cadence matches how we push time‑boxed upgrades.

Can we mitigate without upgrading?

You can blunt specific risks (socket error handlers, disabling high‑risk features) but those should be temporary. The correct mitigation is to upgrade to one of the patched versions.

Will this break my observability stack?

If you rely on AsyncLocalStorage for trace context, the change is stabilizing, not breaking. Still, run a load test and ensure spans/trace IDs stitch correctly through hot paths.

About those OpenSSL advisories this month

January also brought a batch of OpenSSL CVEs. After assessment, the Node.js team determined only a subset with low to moderate impact affects Node’s PFX path and will roll into regular releases rather than emergency security drops. Translation: keep your normal update cadence for OpenSSL‑related items, but don’t let it distract you from this week’s Node upgrade.

A pragmatic triage matrix

When you’re looking at a stack of tickets and pager fatigue is real, use this matrix to focus effort where it pays off:

• External HTTP/2 APIs: Patch first. Confirm socket error handling, run canaries, watch crash metrics.

• TLS edge with ALPN/PSK: Patch and simulate exceptions; verify descriptors aren’t leaking under burst traffic.

• 24.x mTLS backends: Patch, run 30‑minute soak with certificates; watch memory.

• Permission‑model sandboxes: Patch and consider relocating sensitive steps into isolated containers this week.

• Internal‑only batch jobs without these features: Patch within the sprint; lower blast radius but keep pace.

Real‑world deployment gotchas

• CI workers often pin Node via an old base image. Update those or you’ll compile and test against a vulnerable runtime while production is safe—a subtle drift that bites later.

• Some orchestrators reuse pods aggressively. If you don’t force a rollout, yesterday’s pods run yesterday’s Node. Use deployment annotations to trigger restarts.

• Lambda and other serverless platforms can lag behind on minor versions. Vendor the binary in a layer if the managed runtime update is slow to arrive, or shift to a container runtime where you control the base image.

• If a native addon fails to rebuild, don’t block the whole fleet. Gate that feature behind a flag so you can ship the runtime fix and re‑enable the addon later.
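The flag-gated addon idea from the last item can be as small as the sketch below. The module name fast-image-codec and the ENABLE_FAST_CODEC environment variable are hypothetical, as is the helper loadOptionalAddon; the point is only that a failed native load degrades one feature instead of blocking the runtime fix.

```javascript
// Load a native addon only when its feature flag is on and it loads cleanly.
// Returns the module, or null when disabled or unavailable.
function loadOptionalAddon(moduleName, enabled, log = console.warn) {
  if (!enabled) return null;
  try {
    return require(moduleName);
  } catch (err) {
    log(`addon ${moduleName} unavailable (${err.code || err.message}); feature disabled`);
    return null;
  }
}

// Hypothetical addon and flag names, shown for illustration only.
const codec = loadOptionalAddon('fast-image-codec', process.env.ENABLE_FAST_CODEC === '1');
console.log(codec ? 'native codec enabled' : 'native codec disabled');
```

Callers check for null and fall back to a slower path or return a clear "feature unavailable" response.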

What about performance and regressions?

Security releases can nudge behavior around error handling and DNS. Expect minor shifts rather than dramatic swings. Focus your perf checks on:

• Connection churn (HTTP/2 keep‑alives and ALPN negotiation).

• DNS latency and error rates post c‑ares update.

• Memory trends in long‑lived TLS backends.

If you see spikes, capture diffs between pre‑ and post‑upgrade connection stats and retry budgets. Often the fix is a small timeout or pool size adjustment.

A quick word on process: make this repeatable

The teams that turn incidents like this into non‑events have a playbook they actually practice. We’ve published a fast patch briefing and a ship‑this‑week playbook tailored to this month’s release, including test scripts and rollback gates you can copy into your runbooks.

For a brisk read on impact and urgency, see our fast patch briefing for January 2026. If you need an end‑to‑end runbook with roles, gates, and smoke tests, use the ship‑fast playbook. And for teams that want a tighter QA loop, the test smarter checklist covers HTTP/2, TLS, and AsyncLocalStorage edge cases.

What to do next (dev leads and owners)

• Today: Upgrade to 20.20.0, 22.22.0, 24.13.0, or 25.3.0. Rebuild, redeploy, restart.

• Within 48 hours: Complete staged rollout, validate canary metrics, and close exposure tickets.

• This week: If you’re on 18.x, schedule the migration kickoff. Pick 22.x for a long runway or 24.x to align with the freshest LTS. If you want help, skim our security patch assistance services and get in touch.

• This month: Automate runtime inventory, add a standing chaos test for malformed HTTP/2 headers, and pin Node in every Dockerfile/CI image to avoid drift.

Zooming out

This security release is a reminder that runtime hygiene is part of feature delivery, not a competing priority. The fixes improve how Node behaves under stress and failure—exactly where your software spends its worst hours. Treat the upgrade as maintenance on your airbags and brakes: you’ll barely notice them when things go right, and you’ll be grateful for them when something goes wrong.
