EU AI Act 2026: The Last‑Mile Compliance Playbook

EU AI Act 2026 compliance is on every CTO’s and GC’s whiteboard right now. If your AI system is on the EU market or its outputs are used in the Union, you’re in scope, regardless of where your company is based. The clock is loud, but you can still ship on time without gutting your roadmap.

What actually changes in 2026?

Two dates define your plan. First, February 2, 2026 was the deadline for the European Commission to provide guidelines on Article 6—the practical classification of high‑risk AI with concrete examples. Second, on August 2, 2026 the bulk of rules kick in, including obligations for many Annex III high‑risk systems, transparency duties, and the expectation that each Member State has at least one operational AI sandbox. Build your schedule around these two milestones. (artificialintelligenceact.eu)

Expect the Article 6 guidance to sharpen the gray areas: when an Annex III use case is high‑risk versus adjacent and non‑high‑risk, plus what “post‑market monitoring” must look like in practice. If the final text in your sector is still evolving, track the Commission’s updates and plan for deltas rather than waiting for perfect clarity. (ai-act-service-desk.ec.europa.eu)

Does the EU AI Act apply to U.S. companies?

Yes. The Act applies extraterritorially to providers and deployers that place systems on the EU market, put them into service in the EU, or whose outputs are used in the EU. That includes SaaS models served from U.S. clouds, APIs, and embedded AI inside products sold into Europe. If European users consume your system’s outputs, assume you’re covered. (skadden.com)

There are narrow carve‑outs (e.g., scientific research and some open‑source scenarios), but don’t assume an exemption if you’re shipping commercially, touching Annex III domains, or triggering transparency obligations (Article 50). Do a quick scoping pass before you bank on any exception. (skadden.com)

EU AI Act 2026 compliance, made practical

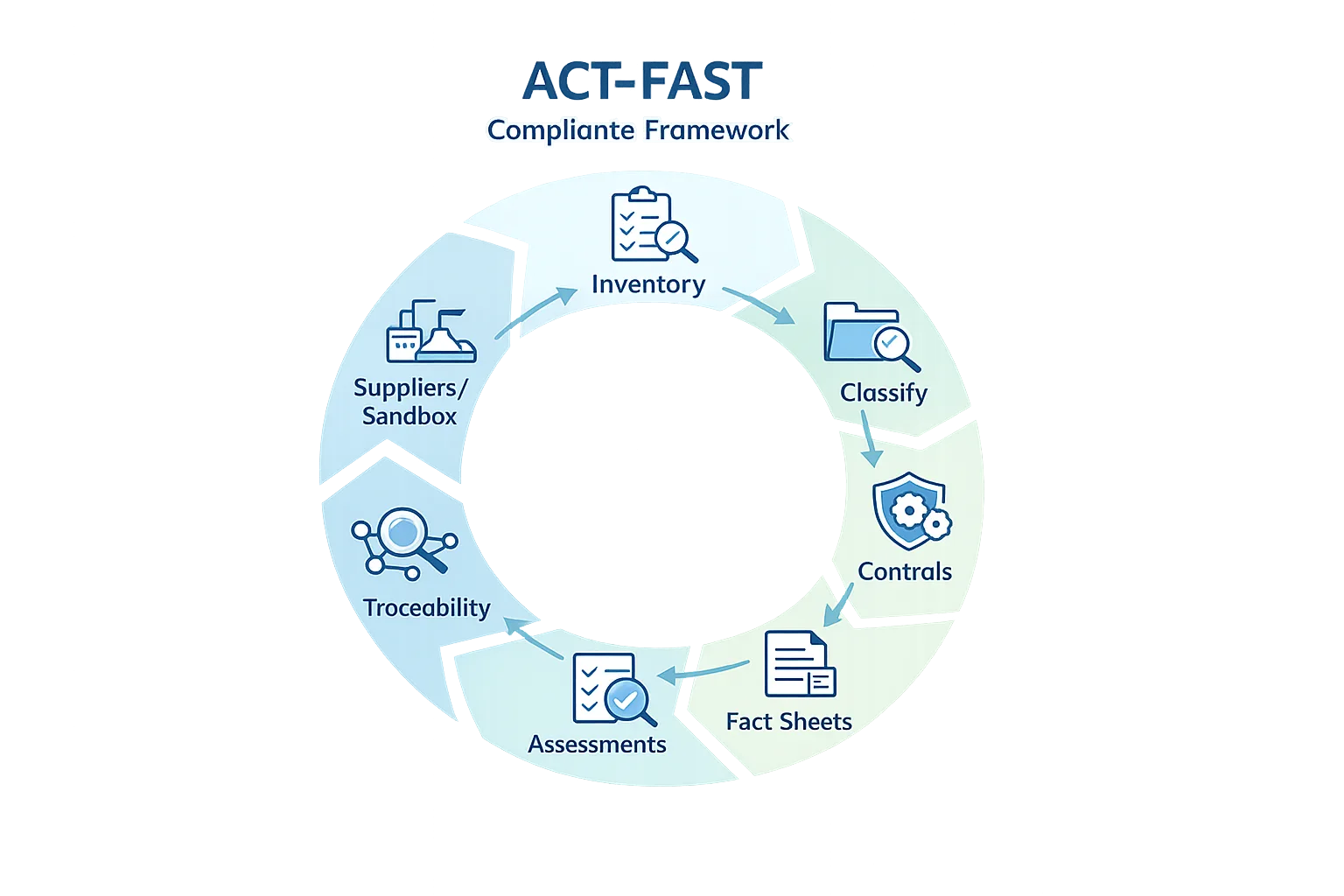

Heavyweight, policy‑first programs stall product teams. You need a compliance architecture that auditors can navigate and engineers will actually use. Use the ACT‑FAST framework we deploy with clients:

A — AI system inventory and data‑flow maps

Build a single system‑of‑record for models, datasets, features, prompts, retrieval sources, third‑party APIs, human‑in‑the‑loop points, and user touchpoints. Map PII flows and where outputs are shown to end users. Treat this as living documentation wired to CI/CD.
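
As a starting point, an inventory entry can be a small typed record that CI validates on every merge. The sketch below is a minimal illustration, not a prescribed schema: the class name, field names, and the example system are all assumptions made for this example.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class AISystemRecord:
    """One inventory entry. All field names here are illustrative assumptions."""
    system_id: str
    purpose: str
    models: list = field(default_factory=list)
    datasets: list = field(default_factory=list)
    third_party_apis: list = field(default_factory=list)
    human_review_points: list = field(default_factory=list)
    user_touchpoints: list = field(default_factory=list)
    processes_pii: bool = False
    outputs_shown_to_end_users: bool = False

    def missing_fields(self) -> list:
        """Names of list fields left empty, so CI can flag incomplete entries."""
        return [name for name, value in asdict(self).items()
                if isinstance(value, list) and not value]


# Hypothetical example system.
record = AISystemRecord(
    system_id="resume-ranker",
    purpose="Rank inbound job applications for recruiter review",
    models=["ranker-v3"],
    datasets=["applications-2025"],
    user_touchpoints=["recruiter dashboard"],
    processes_pii=True,
    outputs_shown_to_end_users=True,
)
print(json.dumps(asdict(record), indent=2))
print("Incomplete fields:", record.missing_fields())
```

An empty list is not always an error (a system may genuinely use no third‑party APIs), so treat the flagged fields as a prompt for a human to confirm rather than as a hard failure.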

C — Classify risk under Article 6

Triage every system against Annex III use cases, with a one‑page justification. If you’re “near‑boundary,” document why it’s not high‑risk and register as required. Create a red‑flag list: biometric identification, employment screening, education and proctoring, access to essential services, and law enforcement contexts. When in doubt, escalate to counsel early. (ai-act-service-desk.ec.europa.eu)

T — Technical and organizational controls

Stand up a lightweight risk management system: attack surface analysis, adversarial and robustness testing, dataset quality checks, concept drift monitoring, and incident response tied to model rollbacks. Add role‑based approvals for high‑risk changes. Keep it in Git: auditors value a reviewable, tamper‑evident history.

F — Fact sheets and transparency

Draft model cards and user‑facing disclosures where users directly interact with AI or where content might be AI‑generated. The disclosure should be clear, proximate to the AI experience, and avoid dark patterns. Plan for Article 50 transparency once in force and keep artifacts versioned with releases. (ai-act-service-desk.ec.europa.eu)

A — Assessments and testing

Run scenario‑based evaluations for fairness, safety, and resilience that mirror your real user journeys. Include stress tests for out‑of‑distribution data and prompt abuse. Automate repeatable checks (robustness, data leakage) in CI; schedule deeper quarterly reviews for high‑risk systems.
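
One way to automate the repeatable checks is a small gate that compares evaluation results against thresholds and returns a failing exit code. This is a sketch under stated assumptions: the metric names and limits are hypothetical, and how the metrics get produced is left to your own eval harness.

```python
# Hypothetical metric names and limits; set your own per system and risk level.
THRESHOLDS = {
    "robustness_accuracy": ("min", 0.90),  # accuracy on perturbed inputs
    "ood_safe_rate": ("min", 0.80),        # out-of-distribution prompts handled safely
    "data_leak_rate": ("max", 0.0),        # outputs that leak held-out records
}


def failed_checks(metrics: dict) -> list:
    """Return a readable failure for every missing or out-of-bounds metric."""
    failures = []
    for name, (kind, limit) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: no result reported")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} is below the minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} is above the maximum {limit}")
    return failures


def gate(metrics: dict) -> int:
    """Print failures and return an exit code (0 = pass, 1 = block the deploy)."""
    failures = failed_checks(metrics)
    for failure in failures:
        print("FAIL", failure)
    return 1 if failures else 0


# Made-up numbers; a CI job would pass the result to sys.exit().
exit_code = gate({"robustness_accuracy": 0.93, "ood_safe_rate": 0.71, "data_leak_rate": 0.0})
print("exit code:", exit_code)
```

Treating a missing metric as a failure is deliberate: a check that silently did not run should block a high‑risk deploy just like a check that regressed.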

S — Supplier and sandbox strategy

Lock down your upstream model/API providers with contractual attestations (training data provenance, eval coverage, red‑team results) and secure update channels. If you qualify, enroll in your national AI regulatory sandbox to de‑risk novel approaches with your competent authority before broad launch. (ai-act-service-desk.ec.europa.eu)

T — Traceability and documentation

Keep a change log that ties code commits to risk notes, test evidence, and sign‑offs. Tag “significant changes in design” so you can demonstrate when an existing system crossed the threshold that triggers fresh obligations after August 2, 2026. (artificialintelligenceact.eu)
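
A change log entry along these lines can be checked mechanically before release. The structure below is a hypothetical sketch: the field names are ours, and the rule that a significant design change needs two sign‑offs is an illustrative internal policy, not something the Act prescribes.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeLogEntry:
    """Ties one commit to its risk note, evidence, and approvals (illustrative fields)."""
    commit_sha: str
    summary: str
    risk_note: str = ""
    test_evidence: list = field(default_factory=list)  # e.g. links to eval reports
    approvers: list = field(default_factory=list)
    significant_design_change: bool = False


def gaps(entry: ChangeLogEntry) -> list:
    """List what is still missing before this entry counts as audit-ready."""
    problems = []
    if not entry.risk_note.strip():
        problems.append("risk note missing")
    if not entry.test_evidence:
        problems.append("test evidence missing")
    if not entry.approvers:
        problems.append("sign-off missing")
    # Assumed internal policy: significant design changes need two approvers.
    if entry.significant_design_change and len(entry.approvers) < 2:
        problems.append("significant change needs two sign-offs")
    return problems


entry = ChangeLogEntry(
    commit_sha="3f2a9c1",
    summary="Swap ranking model architecture",
    risk_note="New architecture; re-ran fairness evals on full applicant set.",
    test_evidence=["evals/2026-03-ranker-fairness.html"],
    approvers=["ml-lead"],
    significant_design_change=True,
)
print(gaps(entry))
```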

How to classify faster under Article 6 (with fewer meetings)

Spin up a two‑step screen:

Step 1 — Annex III proximity check. Could your system directly affect an individual’s access to education, jobs, essential services, or credit, or does it involve biometric or emotion recognition? If yes or “maybe,” proceed to Step 2.

Step 2 — Influence and autonomy test. Is the AI output materially steering or replacing a human decision that impacts someone’s rights or livelihoods? Is the human review substantive (not a rubber stamp)? If you’re still uncertain, log the rationale, mark “provisionally high‑risk,” and apply stricter controls pending final guidance. (ai-act-service-desk.ec.europa.eu)
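
The two‑step screen can be encoded so every system gets the same treatment and the answers are logged. This is a triage sketch, not a legal determination: the outcome labels and the way mixed answers are resolved are our assumptions, and `None` stands for “maybe” or “not yet answered.”

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Screen(Enum):
    NOT_HIGH_RISK = "not high-risk (document the rationale)"
    PROVISIONALLY_HIGH_RISK = "provisionally high-risk"
    HIGH_RISK_CANDIDATE = "high-risk candidate (deep dive)"


@dataclass
class ScreenInput:
    annex_iii_proximity: Optional[bool]          # Step 1
    steers_or_replaces_decision: Optional[bool]  # Step 2
    human_review_is_substantive: Optional[bool]  # Step 2


def two_step_screen(s: ScreenInput) -> Screen:
    # Step 1: only a clear "no" ends the screen; "yes" or "maybe" continues.
    if s.annex_iii_proximity is False:
        return Screen.NOT_HIGH_RISK
    # Step 2: any unanswered question keeps the stricter provisional label.
    if s.steers_or_replaces_decision is None or s.human_review_is_substantive is None:
        return Screen.PROVISIONALLY_HIGH_RISK
    if s.steers_or_replaces_decision and not s.human_review_is_substantive:
        return Screen.HIGH_RISK_CANDIDATE
    if not s.steers_or_replaces_decision and s.human_review_is_substantive:
        return Screen.NOT_HIGH_RISK
    # Mixed answers: stay provisional until counsel or guidance resolves them.
    return Screen.PROVISIONALLY_HIGH_RISK


result = two_step_screen(ScreenInput(True, True, False))
print(result.value)
```

Defaulting every ambiguous path to “provisionally high‑risk” mirrors the advice above: apply the stricter controls first and relax them once the rationale is settled.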

What about foundation models and third‑party APIs?

Rules for general‑purpose AI (GPAI) and governance structures began applying August 2, 2025. If you depend on a provider’s API, request current compliance artifacts: model documentation, usage constraints, copyright and transparency attestations, and their code‑of‑practice posture. Assume you’ll need to surface certain disclosures in your UX and pass through provider limitations—don’t wait for a surprise at audit time. (ai-act-service-desk.ec.europa.eu)

February–August 2026: your sprint plan

Week 1–2 (now): Appoint a single accountable owner (Director+), publish your Annex III watchlist, and finish system inventory. Stand up a compliance branch in your repo with templates for risk logs, test plans, disclosures, and change control.

Week 3–6: Run the Article 6 two‑step screen for every system. High‑risk candidates get a deep dive: risk controls, human‑in‑the‑loop design, transparency copy, and rollback plans. Start pilot testing in a sandboxed cohort in at least one EU market. (ai-act-service-desk.ec.europa.eu)

Week 7–10: Lock supplier attestations, integrate automated robustness tests into CI, and add gated approvals for high‑risk deploys. Complete model cards and link them to release notes.

Week 11–16: Train support and marketing on transparency language. Add UX affordances: explainability snippets, user reporting channels, and opt‑outs where applicable. Prepare post‑market monitoring dashboards and incident drills.

Week 17–24 (through August 2, 2026): Finalize documentation sets per system, rehearse an audit walkthrough, and confirm your Member State sandbox or authority point of contact. Keep a hotfix path for last‑minute guidance changes. (artificialintelligenceact.eu)
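
To turn the week numbers into calendar dates, count back from the deadline. The sketch assumes the 24 weeks end exactly on August 2, 2026, which is our reading of the plan; the variable and function names are illustrative.

```python
from datetime import date, timedelta

DEADLINE = date(2026, 8, 2)
# (first_week, last_week) for each phase of the sprint plan.
PHASES = [(1, 2), (3, 6), (7, 10), (11, 16), (17, 24)]
TOTAL_WEEKS = PHASES[-1][1]

# The plan must start this many weeks before the deadline to fit in full.
plan_start = DEADLINE - timedelta(weeks=TOTAL_WEEKS)


def phase_start(first_week: int) -> date:
    """Calendar date on which a given plan week begins."""
    return plan_start + timedelta(weeks=first_week - 1)


for first, last in PHASES:
    print(f"Week {first}-{last}: starts {phase_start(first).isoformat()}")
```

If you start later than the computed `plan_start`, the phases have to compress, so shorten the later phases explicitly rather than letting the inventory and classification work slip.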

People also ask

Do open‑source models keep us out of scope?

Not automatically. Open‑source can be exempt in some cases, but if you place a system on the market, trigger transparency obligations, or touch prohibited/high‑risk areas, obligations follow. Treat “open‑source” as a factor, not a shield. (skadden.com)

What counts as a “significant change” after August 2, 2026?

The Act treats “significant changes in design” as a trigger for obligations on high‑risk systems that were already on the market before that date. Translate that into internal criteria: model architecture swaps, major dataset shifts, removal of human review, or new high‑stakes use cases. Document each and re‑run your classification on those events. (artificialintelligenceact.eu)
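
Internal criteria like these are easy to check on every release by diffing two release profiles. The sketch below is one possible encoding, with assumptions stated plainly: the profile fields are hypothetical, a “major dataset shift” is approximated by a change in the major number of a `major.minor` dataset version, and the high‑stakes watchlist is an example, not the Act’s wording.

```python
from dataclasses import dataclass

# Example watchlist (assumption); align it with your own Annex III red-flag list.
HIGH_STAKES_USE_CASES = {"employment screening", "credit scoring", "exam proctoring"}


@dataclass(frozen=True)
class ReleaseProfile:
    architecture: str
    dataset_version: str        # assumed "major.minor" format
    human_review_enabled: bool
    use_cases: frozenset


def significant_change_reasons(old: ReleaseProfile, new: ReleaseProfile) -> list:
    """Return the internal criteria that this release trips, if any."""
    reasons = []
    if old.architecture != new.architecture:
        reasons.append("model architecture swap")
    if old.dataset_version.split(".")[0] != new.dataset_version.split(".")[0]:
        reasons.append("major dataset shift")
    if old.human_review_enabled and not new.human_review_enabled:
        reasons.append("human review removed")
    added = (new.use_cases - old.use_cases) & HIGH_STAKES_USE_CASES
    if added:
        reasons.append("new high-stakes use case: " + ", ".join(sorted(added)))
    return reasons


v1 = ReleaseProfile("gradient-boosted trees", "2.4", True, frozenset({"lead scoring"}))
v2 = ReleaseProfile("transformer", "3.0", True,
                    frozenset({"lead scoring", "employment screening"}))
print(significant_change_reasons(v1, v2))
```

A non‑empty result is a signal to tag the release and re‑run classification; whether the change is “significant” in the legal sense remains a judgment for counsel.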

Should we join an AI regulatory sandbox?

If you’re piloting novel high‑risk functionality or a boundary case, yes. Member States are expected to operate at least one sandbox; use it to validate controls and disclosures with your authority before a wide release. (ai-act-service-desk.ec.europa.eu)

Design patterns that will fail audits

• “Human in the loop” that’s a progress bar—no actual veto power.

• Vague transparency: burying AI notices in a footer or one‑time modal.

• No rollback plan when evals regress under adversarial prompts.

• Static model cards with no link to the running version or release notes.

• Missing supplier attestations for third‑party models/APIs.

• Risk controls only in docs—not enforced in CI/CD.

A lean documentation stack auditors actually like

• System dossier: one page with purpose, users, risks, and controls.

• Data sheet: sources, consent/licensing posture, sampling artifacts.

• Eval pack: fairness, robustness, safety results with thresholds and baselines.

• Incident plan: severity classification (A/B/C), comms, and rollback runbook.

• Change log: releases with links to diffs, test evidence, and approvals.
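
If each system keeps this stack in its own folder, a short script can confirm nothing is missing before a release or an audit rehearsal. The file names and folder layout below are assumptions made for illustration; map them to whatever your repo actually uses.

```python
import tempfile
from pathlib import Path

# Hypothetical file names for the five documents in the stack.
REQUIRED_DOCS = [
    "system_dossier.md",
    "data_sheet.md",
    "eval_pack.md",
    "incident_plan.md",
    "change_log.md",
]


def missing_docs(system_dir: Path) -> list:
    """Return the required documents that are absent from a system's folder."""
    return [name for name in REQUIRED_DOCS if not (system_dir / name).is_file()]


# Demo against a throwaway folder holding only two of the five documents.
with tempfile.TemporaryDirectory() as tmp:
    system_dir = Path(tmp)
    (system_dir / "system_dossier.md").write_text("purpose, users, risks, controls\n")
    (system_dir / "change_log.md").write_text("v1.0.0: initial release\n")
    print("Missing:", missing_docs(system_dir))
```

Presence is only the first bar. Pair a check like this with a review that each document matches the version actually running.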

Zooming out: why the 2026 timing matters

By August 2, 2026, enforcement ramps up and national sandboxes should be operational. If you’ve wired documentation to delivery, transparency to UX, and risk tests to CI, you’ll be ready to show your work and keep shipping. Teams that wait for “perfect clarity” in guidance typically end up rushing brittle paperwork right before audits. Ship durable systems, not just binders. (ai-act-service-desk.ec.europa.eu)

What to do next

• Start the Article 6 two‑step screen across your portfolio this week.

• Add automated robustness tests and a sign‑off gate to your CI.

• Draft user‑facing transparency copy and run moderated UX tests.

• Lock supplier attestations and align on incident communication.

• If eligible, apply to your Member State’s AI sandbox.

If you want a pragmatic partner, see how we scope and ship compliance‑ready features, explore a services engagement for AI product audits, browse a portfolio of shipped work in regulated spaces, and reach our team to get moving.
