Vercel Pro pricing now revolves around a monthly credit and usage, not a patchwork of fixed allocations. If you saw your Pro plan switch in September and your team started migrating in October–November, you’re not imagining it—this is a real shift. The headline: your Functions on Fluid compute are billed by Active CPU time, provisioned memory, and invocations, and the Pro plan includes a monthly usage credit (not big static buckets). If you rely on Next.js APIs, ISR, or AI workloads, it’s time to re-baseline your budgets.

What changed, exactly (September–November 2025)

On September 9, 2025, Vercel introduced a new Pro plan that replaces numerous included quotas with a monthly usage credit. Viewer seats became free, and key enterprise-grade features like SAML SSO and HIPAA BAAs can be enabled self‑serve. Spend Management is on by default for new Pro teams and rolls out as existing teams switch over.

Earlier, on June 25, 2025, Active CPU pricing landed for Fluid compute and became the default model after a redeploy. Function limits were raised the same day: default max duration increased to 300 seconds across plans, with higher ceilings on Pro and Enterprise, and instance sizes were updated (for example, Standard at 1 vCPU / 2 GB).

On November 6, Edge Config switched from package-based to per‑unit pricing on Pro (reads and writes billed individually). And Vercel’s docs now make it clear: Pro usage is credit-based—legacy “included” compute allocations you might remember don’t apply under the new Pro plan.

Vercel Pro pricing: how billing now works

For Functions running on Fluid compute, your cost per request is the sum of:

1) Active CPU: the time your code is actively executing on the CPU. It is billed per CPU-hour and pauses during I/O waits. A representative U.S. East region rate is $0.128 per CPU-hour.

2) Provisioned memory: the memory size you select for the instance, billed per GB-hour while the instance is handling requests (even if CPU is waiting). A representative rate is $0.0106 per GB-hour.

3) Invocations: $0.60 per million requests on Pro.

These three roll up against your Pro credit first; once that’s exhausted, charges flow on-demand.

Region matters. For example, common U.S. East regions are about $0.128/CPU-hour and $0.0106/GB-hour; San Francisco is higher (about 38% more on both CPU and memory). If your latency SLO allows it, running in a cheaper region lowers cost without hurting user experience.
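As a rough sketch of the three-part sum above, the per-request cost can be written as one small function. The U.S. East rates are the representative figures quoted in this article; the San Francisco entry is derived here by applying the approximate 38% uplift, so treat it as illustrative rather than a published price. The names `RegionRates`, `RATES`, and `costPerRequest` are made up for this example.

```typescript
// Representative rates from this article. The "sfo" entry is an assumption:
// it applies the ~38% uplift mentioned above, not a published price.
interface RegionRates {
  cpuPerHour: number;   // USD per Active CPU hour
  memPerGbHour: number; // USD per GB-hour of provisioned memory
}

const INVOCATION_PRICE_PER_MILLION = 0.6; // USD, Pro plan

const RATES = {
  usEast: { cpuPerHour: 0.128, memPerGbHour: 0.0106 },
  sfo: { cpuPerHour: 0.128 * 1.38, memPerGbHour: 0.0106 * 1.38 },
};

// Cost of one request: Active CPU + provisioned memory + one invocation.
function costPerRequest(
  rates: RegionRates,
  activeCpuSeconds: number,
  wallSeconds: number,
  memoryGb: number,
): number {
  const cpu = (activeCpuSeconds / 3600) * rates.cpuPerHour;
  const memory = ((memoryGb * wallSeconds) / 3600) * rates.memPerGbHour;
  const invocation = INVOCATION_PRICE_PER_MILLION / 1_000_000;
  return cpu + memory + invocation;
}

// Example: 0.3 s Active CPU, 0.9 s wall time, 2 GB instance.
const eastCost = costPerRequest(RATES.usEast, 0.3, 0.9, 2);
const sfoCost = costPerRequest(RATES.sfo, 0.3, 0.9, 2);
```

The invocation fee is the same in both calls, so the gap between `sfoCost` and `eastCost` comes entirely from the CPU and memory rates.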

“Show me the math” (with realistic numbers)

Let’s model two workloads using the representative U.S. East rates above.

Scenario A: API service (spiky traffic)

• Memory: 2 GB Standard instance

• Per request: 0.3s Active CPU, 0.9s total wall time

• Monthly volume: 5,000,000 requests

CPU per request ≈ (0.3/3600) × $0.128 = $0.00001067.

Memory per request ≈ (2 GB × 0.9/3600) × $0.0106 = $0.00000530.

Total per request ≈ $0.0000160. Multiply by 5M → ~$80 in compute. Invocations add ~$3 (5M × $0.60/M), for about $83 in total. Your Pro credit covers the first $20, so you’d see roughly $63 on-demand for this service.

Scenario B: AI agent (bursty, I/O heavy)

• Memory: 2 GB Standard instance

• Per request: 10s Active CPU; 40s wall time (LLM I/O and tool calls)

• Monthly volume: 100,000 requests

CPU per request ≈ (10/3600) × $0.128 = $0.0003556.

Memory per request ≈ (2 × 40/3600) × $0.0106 = $0.0002356.

Total per request ≈ $0.0005911. Multiply by 100k → ~$59.11. Invocations add ~$0.06. Again, the Pro credit reduces the net; the rest bills on demand.

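You’ll notice how large a share memory time takes in long wall-time workloads: in Scenario B it is about 40% of the compute cost, even though the CPU is idle for 30 of the 40 seconds. That’s by design: Active CPU pauses while your code waits on I/O, but memory keeps ticking while the instance is alive. If your function pins 4 GB for 40 seconds, you pay for 4 GB × 40s even if CPU is idle, and at that size the memory charge (about $0.00047 per request) exceeds the CPU charge. That’s the lever to target first.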
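One way to see where memory takes over is to compute the wall time at which the memory charge equals the Active CPU charge. The sketch below does that with the representative U.S. East rates from this article; `breakEvenWallSeconds` is a name invented for this example.

```typescript
// Wall time (seconds) at which the memory charge equals the Active CPU charge.
// Beyond this point, memory is the larger share of the request's cost.
function breakEvenWallSeconds(
  activeCpuSeconds: number,
  memoryGb: number,
  cpuPerHour: number,
  memPerGbHour: number,
): number {
  return (activeCpuSeconds * cpuPerHour) / (memoryGb * memPerGbHour);
}

// Scenario B inputs (10 s Active CPU) at two memory sizes.
const at2Gb = breakEvenWallSeconds(10, 2, 0.128, 0.0106); // about 60 s
const at4Gb = breakEvenWallSeconds(10, 4, 0.128, 0.0106); // about 30 s
```

With 40 seconds of wall time, Scenario B sits below the break-even point at 2 GB but above it at 4 GB, which is why doubling memory changes which term leads the bill.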

Will your bill go up or down?

It depends on workload shape and whether you were using the old Pro allocations heavily. Here’s a quick rubric I use with clients:

Most likely to pay less: Long‑lived AI or streaming workloads that used to overpay for idle time. Active CPU charges only for real CPU work. Combine that with in‑function concurrency and you often see double‑digit percentage savings.

Most likely to pay about the same: Typical API endpoints with moderate compute and reasonable memory sizing. Invocations are inexpensive; the variable is memory‑seconds, which you can tune.

At risk of paying more: Apps that lean on high memory settings for convenience (e.g., oversized libraries, large model runners) or that run ISR revalidation in a way that keeps instances alive longer than needed. If you were counting on big “included” Pro buckets, the mental model needs to switch to the monthly credit plus precise usage.

The practical playbook to control costs this week

Here’s the thing—jumping clouds or rewriting infrastructure won’t help if you don’t fix the fundamentals. Do these in order:

1) Confirm your plan and migration status

Check your team’s plan page and billing settings. If you’re on the new Pro plan, you’ll see a monthly usage credit. If you’re not yet migrated, set expectations with stakeholders about when the switch will occur and how it affects forecasts.

2) Enable and tune Spend Management

Spend Management is available on Pro and is enabled by default for newer Pro teams. Set a budget slightly below your comfort threshold. Turn on notifications at 50%, 75%, and 100%, and decide whether you want the hard stop (pause production) at 100%. Add a webhook to notify Slack and trigger a safe fallback (for example, serve static or cached content) if you ever hit the ceiling mid‑month.
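To make the 50/75/100% rule concrete, here is a minimal sketch of the decision your own webhook receiver might make once it knows month-to-date spend. Everything in it is hypothetical: `crossedAlerts`, `Alert`, and the action names are invented for illustration and do not reflect Vercel's webhook payload or API.

```typescript
// Hypothetical helper: which alert thresholds has month-to-date spend crossed?
// This only models the 50/75/100% rule; it is not Vercel's webhook format.
type Action = "notify" | "fallback";

interface Alert {
  thresholdPercent: number;
  action: Action;
}

function crossedAlerts(spendUsd: number, budgetUsd: number): Alert[] {
  if (budgetUsd <= 0) throw new Error("budget must be positive");
  const percentUsed = (spendUsd / budgetUsd) * 100;
  const thresholds = [50, 75, 100];
  return thresholds
    .filter((t) => percentUsed >= t)
    .map((t): Alert => ({
      thresholdPercent: t,
      // At 100%, switch to the safe fallback (static or cached content).
      action: t === 100 ? "fallback" : "notify",
    }));
}
```

Keeping the rule in a pure function like this makes it easy to unit test the runbook logic separately from the Slack call and the fallback switch.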

3) Pick regions intentionally

U.S. East and many EU regions have lower CPU/GB‑hour rates than West Coast or APAC metros. If your audience is mixed, test from your top markets and pick the cheapest region that still keeps p95 latency competitive. The exact delta can be ~35–40% on CPU and memory between some regions.
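The selection rule described here (cheapest region that still meets your p95 target) is simple enough to sketch. The latency numbers below are made-up sample measurements, the west rate assumes the roughly 38% uplift mentioned earlier, and `RegionCandidate` and `pickRegion` are names invented for this example.

```typescript
interface RegionCandidate {
  name: string;
  cpuPerHour: number;   // USD per Active CPU hour
  p95LatencyMs: number; // measured from your top markets
}

// Return the cheapest candidate whose p95 latency meets the target, or undefined.
function pickRegion(
  candidates: RegionCandidate[],
  maxP95Ms: number,
): RegionCandidate | undefined {
  const eligible = candidates.filter((c) => c.p95LatencyMs <= maxP95Ms);
  eligible.sort((a, b) => a.cpuPerHour - b.cpuPerHour);
  return eligible[0];
}

// Sample data only: replace with your own measurements and current rates.
const candidates: RegionCandidate[] = [
  { name: "us-east", cpuPerHour: 0.128, p95LatencyMs: 180 },
  { name: "us-west", cpuPerHour: 0.177, p95LatencyMs: 120 },
];
const choice = pickRegion(candidates, 200);
```

With a 200 ms target both regions qualify and the cheaper one wins; tighten the target to 150 ms and only the more expensive region remains.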

4) Right‑size memory before anything else

Start with Standard (1 vCPU / 2 GB). If you bumped memory historically to dodge timeouts, try Active CPU with the new 300s default first. Measure whether your code actually needs more memory for throughput—don’t pay for 4 GB if 2 GB does the job.

5) Reduce wall time: concurrency, batching, and waitUntil

Because memory keeps billing while requests are in flight, you want to spend fewer seconds per instance. Use in‑function concurrency to process multiple sub‑tasks per invocation (queue internally, then await in batches). Offload non‑critical work to waitUntil so the response returns sooner and the instance can pause. For bulk ISR revalidation, batch paths intelligently and stagger jobs to avoid long‑lived instances.
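As one possible shape for "queue internally, then await in batches", the sketch below runs a task over a list with a fixed batch size so that I/O waits overlap inside a single invocation. `chunk` and `runInBatches` are illustrative helpers, not part of any Vercel or Next.js API, and the right batch size is something to find through load tests.

```typescript
// Split a list into consecutive batches of at most `size` items.
function chunk<T>(items: T[], size: number): T[][] {
  if (size < 1) throw new Error("size must be at least 1");
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Run `task` over all items, at most `size` at a time, preserving input order.
async function runInBatches<T, R>(
  items: T[],
  size: number,
  task: (item: T) => Promise<R>,
): Promise<R[]> {
  const results: R[] = [];
  for (const batch of chunk(items, size)) {
    // Items within a batch overlap their I/O waits; batches run one after another.
    const settled = await Promise.all(batch.map((item) => task(item)));
    results.push(...settled);
  }
  return results;
}
```

For bulk ISR revalidation, `items` could be the list of paths and `task` whatever function triggers revalidation for one path in your app.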

6) Rein in invocations where it’s easy

At $0.60 per million, invocations are cheap, but at very high scale they’re worth optimizing. Coalesce chatty UI requests, implement HTTP caching aggressively, and adopt Server Actions incrementally where appropriate to cut round trips.
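Coalescing can be as simple as sharing one in-flight promise between callers that ask for the same thing at the same time. The following is a minimal client-side sketch under that assumption; `coalesce` and `inFlight` are names invented here, and it deliberately does no caching once a request has settled.

```typescript
// Share one in-flight promise among callers that ask for the same key.
const inFlight = new Map<string, Promise<unknown>>();

function coalesce<T>(key: string, load: () => Promise<T>): Promise<T> {
  const existing = inFlight.get(key);
  if (existing) return existing as Promise<T>;

  const pending = load().then(
    (value) => {
      // Clear the entry so later calls fetch fresh data.
      inFlight.delete(key);
      return value;
    },
    (error) => {
      inFlight.delete(key);
      throw error;
    },
  );
  inFlight.set(key, pending);
  return pending;
}
```

If three components request the same resource during one render pass, only the first call runs `load`; the other two receive the same promise, so the backend sees one invocation instead of three.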

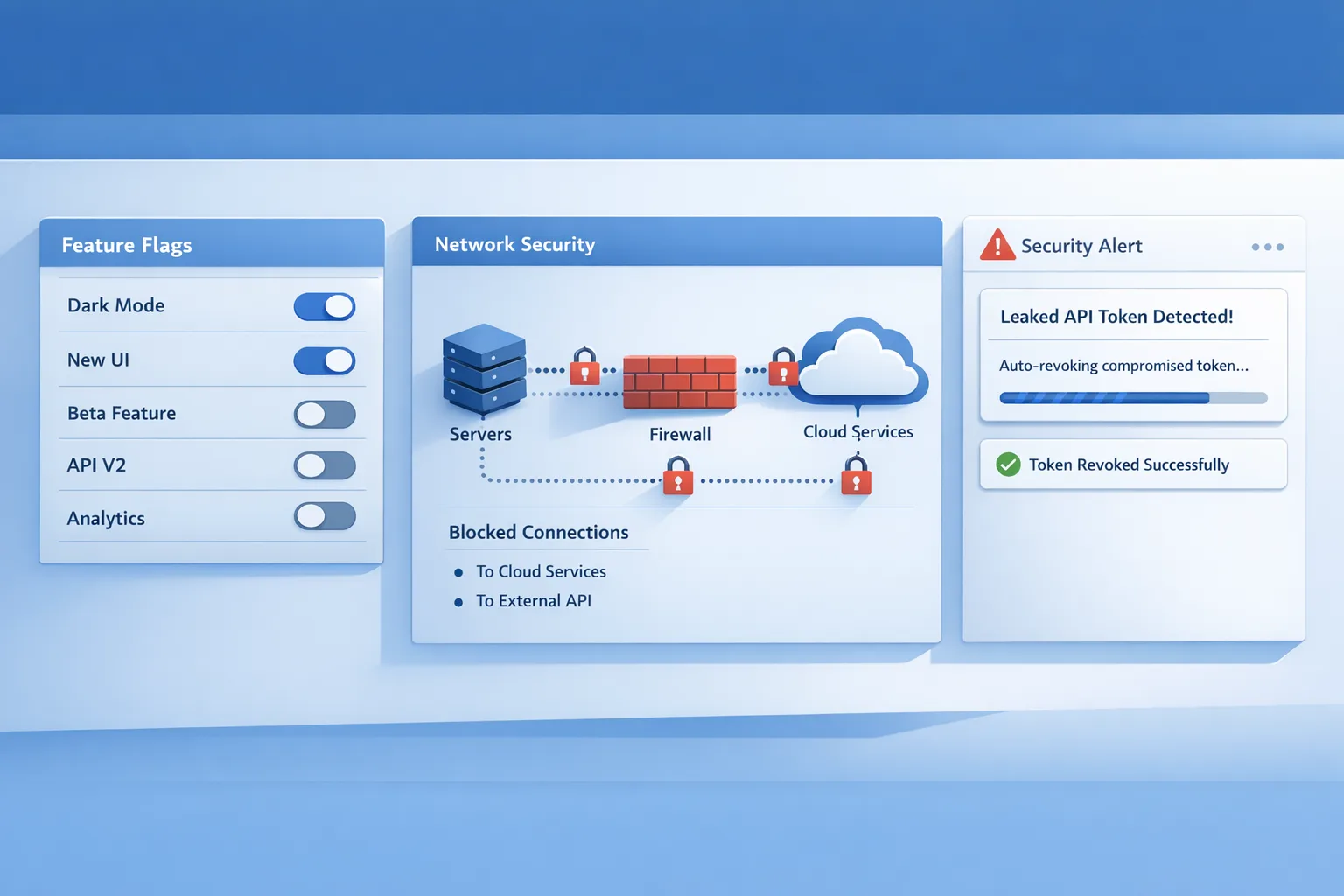

7) Watch Edge Config and images

Edge Config now bills reads and writes per unit on Pro. If you use it for feature flags or routing, sample usage for a week and extrapolate. For image transformations, review transformation counts and cache behavior; move noisy transformations to build time when possible.
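The "sample a week and extrapolate" step is plain arithmetic, sketched below. This article does not quote the per-unit Edge Config prices, so the unit prices are parameters you supply from the current pricing page; `UsageCounts`, `extrapolateMonthly`, and `estimateMonthlyCost` are hypothetical names.

```typescript
interface UsageCounts {
  reads: number;
  writes: number;
}

// Scale a 7-day sample to a month of `daysInMonth` days.
function extrapolateMonthly(sample: UsageCounts, daysInMonth = 30): UsageCounts {
  const factor = daysInMonth / 7;
  return {
    reads: Math.round(sample.reads * factor),
    writes: Math.round(sample.writes * factor),
  };
}

// Unit prices are caller-supplied on purpose: look them up before relying on the result.
function estimateMonthlyCost(
  monthly: UsageCounts,
  pricePerRead: number,
  pricePerWrite: number,
): number {
  return monthly.reads * pricePerRead + monthly.writes * pricePerWrite;
}
```

A straight-line extrapolation assumes the sampled week is typical, so pick a week without launches or incidents, or sample two and compare.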

A quick calculator you can run today

Grab a single endpoint and compute your real cost with three measurements and one decision:

Measure

• Active CPU per request in seconds (log a monotonic timer around your actual compute)

• Wall time per request (time from entry to response)

• Peak concurrent requests at p95 (from logs or APM)

Decide

• Memory size (start at 2 GB; increase only if you see GC pressure or throughput gains)

Compute cost per request

• CPU: (active_cpu_seconds / 3600) × regional_CPU_rate

• Memory: (memory_GB × wall_seconds / 3600) × regional_GB_hour_rate

• Invocations: requests × $0.60 / 1,000,000 (on Pro)

Run this for your top endpoints. Multiply by monthly volume. Subtract your Pro credit. That’s the bill you should expect. Repeat after you right‑size memory and apply batching or waitUntil; the difference is your savings.
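Put together, the calculator might look like the sketch below: sum the monthly cost of each endpoint, then subtract the credit. The rates are the representative U.S. East figures from this article, the $20 credit is the one used in Scenario A, and the type and function names are invented for this example.

```typescript
interface Endpoint {
  name: string;
  activeCpuSeconds: number; // measured per request
  wallSeconds: number;      // measured per request
  memoryGb: number;         // chosen instance memory
  monthlyRequests: number;
}

interface Rates {
  cpuPerHour: number;
  memPerGbHour: number;
  perMillionInvocations: number;
}

// Monthly cost of one endpoint before any credit is applied.
function monthlyCost(e: Endpoint, r: Rates): number {
  const cpu = (e.activeCpuSeconds / 3600) * r.cpuPerHour;
  const memory = ((e.memoryGb * e.wallSeconds) / 3600) * r.memPerGbHour;
  const invocation = r.perMillionInvocations / 1_000_000;
  return (cpu + memory + invocation) * e.monthlyRequests;
}

// Expected on-demand bill: total usage minus the monthly credit, never below zero.
function expectedBill(endpoints: Endpoint[], r: Rates, creditUsd: number): number {
  const total = endpoints.reduce((sum, e) => sum + monthlyCost(e, r), 0);
  return Math.max(0, total - creditUsd);
}

// Scenario A from this article.
const usEast: Rates = { cpuPerHour: 0.128, memPerGbHour: 0.0106, perMillionInvocations: 0.6 };
const scenarioA: Endpoint = {
  name: "api",
  activeCpuSeconds: 0.3,
  wallSeconds: 0.9,
  memoryGb: 2,
  monthlyRequests: 5_000_000,
};
const bill = expectedBill([scenarioA], usEast, 20); // roughly $63
```

Run it once with today's measurements and again after right-sizing memory or shortening wall time; the difference between the two results is the saving to report.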

Gotchas most teams hit

“We upgraded memory and it got more expensive without getting faster.” Right—memory is billed continuously per instance while busy. Increase it only when throughput scales or to avoid out‑of‑memory crashes.

“We redeployed and our bill dropped.” That’s expected if you moved from legacy function pricing to Active CPU. Make sure all projects redeploy to pick up the new model consistently.

“Our functions time out at 90s.” The default max duration is now 300s on Pro, with a higher ceiling available. Confirm your project settings; if you previously set shorter timeouts, increase them prudently and track user impact.

“San Francisco region costs more than Virginia?” Yes. If you don’t need West Coast proximity, run U.S. East and keep latency within your SLO by using CDN caching and smart routing.

“Do we still get 1M invocations included on Pro?” Under the new Pro plan, usage is credit‑based rather than fixed invocations or duration buckets. Model invocations at $0.60/M and let the monthly credit absorb the first portion of usage.

When to consider architecture changes

You shouldn’t need a platform swap to get good outcomes, but there are a few honest triggers for change:

• You have a known memory‑bound service that cannot be optimized below 8–16 GB without hurting UX.

• You require specialized accelerators (e.g., GPUs) not available in your target regions.

• Your compliance scope mandates isolated tenancy that doesn’t fit Pro’s self‑serve controls.

Even then, benchmark the savings first; teams often reclaim more by right‑sizing and reducing wall time than by porting.

How this compares to other “pricing pivots”

Providers moving from coarse “included” buckets to usage‑based billing is now the norm. We’ve covered similar shifts elsewhere, including container CPU pricing updates and how to respond quickly. If you’d like a deeper playbook for spotting and fixing cost regressions after a provider change, read our take on what to fix when pricing flips overnight and how to cut CPU costs immediately by switching models.

Migration checklist (copy/paste)

Use this as your one‑pager with the team:

1) Confirm plan and credit in Billing → Pro plan page.

2) Enable Spend Management; set budget and alerts; wire a webhook to Slack.

3) Choose the lowest‑cost region that meets your p95 latency target.

4) Redeploy to ensure Active CPU pricing is active on all projects.

5) Set Functions to Standard 2 GB; raise only if throughput improves.

6) Move non‑critical work to waitUntil; batch ISR revalidation.

7) Implement in‑function concurrency; cap to safe levels from load tests.

8) Sample Edge Config reads/writes and estimate monthly cost.

9) Run the quick calculator on top endpoints; track the before/after.

10) Document SLOs and a runbook for when Spend Management triggers.

What about runtimes and upgrades?

If you’re upgrading Node.js to match platform defaults or chasing performance, bookmark our Node.js 25 upgrade playbook and the guide on native TypeScript support in Node.js. Keeping your runtime modern helps with startup time, memory, and bundle size—all tied to cost on Fluid compute.

What to do next (developers)

• Instrument: log Active CPU seconds and wall seconds per request.

• Reduce: drop memory to 2 GB where possible; batch internal work; use waitUntil.

• Regionalize: move to a cheaper region if p95 holds.

• Re‑test: run k6 or Artillery with real traffic shapes; compare bills week‑over‑week.

What to do next (business owners)

• Budget guardrails: set Spend Management thresholds today.

• Forecast: have engineering provide per‑endpoint cost estimates using the calculator here.

• Review: track Edge Config, images, and analytics event volumes monthly.

• Ask for a second look: if you want outside help tuning costs, see what we do for platform audits and reach out via our contact form.

The bottom line

Pricing flips are rarely fun, but this one is workable. The new Pro model rewards workloads that keep CPU busy and wall time low. If you right‑size memory, shorten request lifetimes, and let concurrency do its job, your bill stabilizes—and often goes down. When in doubt, run the math on your top endpoints, treat region as a first‑class knob, and keep Spend Management tight. If you want a seasoned second set of eyes, browse our recent work and blog to see how we approach cost and performance in the real world.
